Double Deep Q-Learning (DDQN)

Using Deep RL to land a Lunar Module

With so many ML milestones being reached, few areas capture my attention as much as Reinforcement Learning. In this project, I implemented the Double Deep Q-Network (DDQN), a reinforcement learning algorithm introduced by DeepMind in their seminal paper “Deep Reinforcement Learning with Double Q-learning”.

DDQN is an extension of the original DQN algorithm that addresses the problem of overestimation bias in Q-learning. The key idea behind DDQN is to decouple the selection of the action from its evaluation. This is achieved by using two separate networks: one for selecting the best action and another for estimating its value.
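To make the decoupling concrete, the two training targets can be written side by side. The notation is my own shorthand rather than anything fixed by the code in this project: $\theta$ denotes the weights of the network that selects the action, $\theta^-$ the weights of the network that evaluates it, $r$ the reward, $s'$ the next state and $\gamma$ the discount factor.

$$
y^{\text{DQN}} = r + \gamma \max_{a} Q(s', a; \theta^-)
$$

$$
y^{\text{DDQN}} = r + \gamma \, Q\big(s', \arg\max_{a} Q(s', a; \theta); \theta^-\big)
$$

In the DQN target, the same set of weights both picks the maximizing action and supplies its value, so any upward noise in the estimates is selected and propagated. In the DDQN target, the action is picked with $\theta$ and scored with $\theta^-$, which tends to dampen that upward bias.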

The theory behind DDQN can be summarized as follows:

- Maintain two Q-networks: a primary network and a target network.

- Use the primary network to select the best action in the next state.

- Use the target network to estimate the Q-value of that selected action.

- Periodically update the target network with the weights of the primary network.
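The first three steps above can be sketched for a single transition as follows. This is a minimal, framework-free illustration and not the code from my repository: the names `ddqn_target`, `dqn_target`, `q_primary` and `q_target` are hypothetical, and the two "networks" are stand-in functions that map a state to a list of Q-values, one per action. The periodic weight copy is left out because it depends on the deep learning framework in use.

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in for a network: maps a state to one Q-value per action.
QFunction = Callable[[Sequence[float]], List[float]]


def ddqn_target(
    reward: float,
    next_state: Sequence[float],
    done: bool,
    q_primary: QFunction,
    q_target: QFunction,
    gamma: float = 0.99,
) -> float:
    """Double DQN training target for a single transition."""
    if done:
        # No future return after a terminal state.
        return reward
    # Selection: the primary network picks the greedy action in next_state.
    primary_values = q_primary(next_state)
    best_action = max(range(len(primary_values)), key=primary_values.__getitem__)
    # Evaluation: the target network scores that same action.
    evaluated_value = q_target(next_state)[best_action]
    return reward + gamma * evaluated_value


def dqn_target(
    reward: float,
    next_state: Sequence[float],
    done: bool,
    q_target: QFunction,
    gamma: float = 0.99,
) -> float:
    """Plain DQN target for comparison: one network selects and evaluates."""
    if done:
        return reward
    return reward + gamma * max(q_target(next_state))


if __name__ == "__main__":
    # Toy fixed Q-values (made up for illustration): 4 actions, 8-dim state.
    q_primary = lambda s: [1.0, 3.0, 2.0, 0.5]
    q_target = lambda s: [4.0, 1.5, 2.5, 0.0]
    state = [0.0] * 8
    print("DDQN target:", ddqn_target(1.0, state, False, q_primary, q_target, 0.9))
    print("DQN target: ", dqn_target(1.0, state, False, q_target, 0.9))
```

With these toy values the plain DQN target latches onto the target network's largest estimate, while the DDQN target uses the target network's estimate for the action the primary network prefers, so it comes out lower. That gap is the overestimation the method is meant to reduce.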

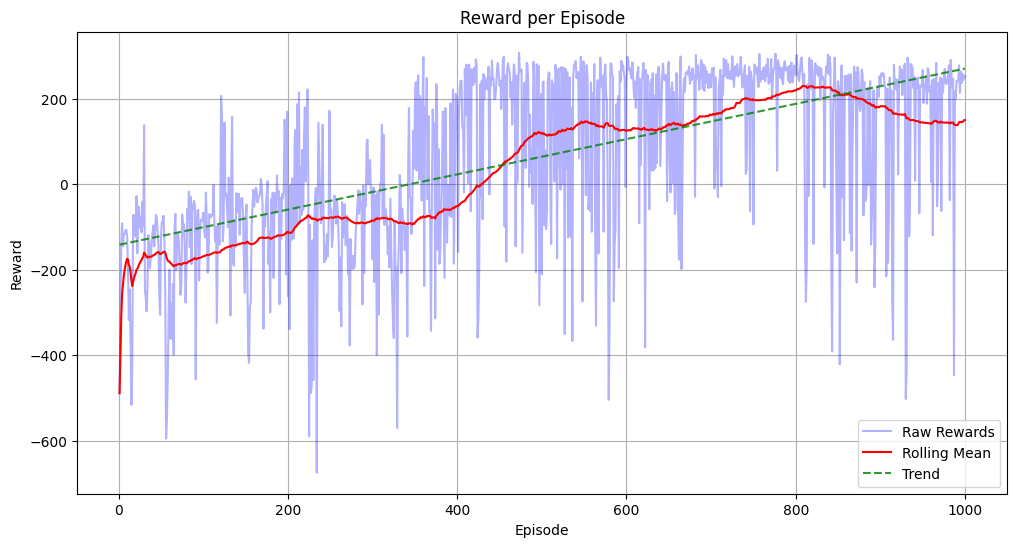

I applied this DDQN algorithm to the Lunar Lander environment from OpenAI Gym, a complex control task where an agent must learn to safely land a spacecraft on the moon’s surface. The agent learned to land the module consistently after several hundred episodes of training.

You can find the full implementation and detailed results in my GitHub repository, as well as in the ‘repos’ section of my website. The code includes the DDQN implementation, training loop, and visualization of the learning progress.

In my runs, performance peaks at approximately 800 training episodes. Beyond this point performance declines, which suggests overfitting. At this stage, the mean reward per episode consistently exceeds 200, which aligns with OpenAI’s definition of having ‘solved’ the environment.
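A check for the ‘solved’ threshold can be sketched as a rolling mean over recent episode rewards. The helper name `first_solved_episode` is hypothetical, and the 100-episode window is an assumption based on the commonly cited convention for this environment, so treat both parameters as adjustable.

```python
from collections import deque
from typing import Iterable, Optional


def first_solved_episode(
    episode_rewards: Iterable[float],
    threshold: float = 200.0,  # reward level treated as 'solved'
    window: int = 100,         # assumed averaging window, adjust as needed
) -> Optional[int]:
    """Return the 1-based episode where the rolling mean first reaches threshold."""
    recent = deque(maxlen=window)
    running_sum = 0.0
    for episode, reward in enumerate(episode_rewards, start=1):
        if len(recent) == window:
            # The oldest reward is about to be evicted by the append below.
            running_sum -= recent[0]
        recent.append(reward)
        running_sum += reward
        # Only judge once a full window of episodes is available.
        if len(recent) == window and running_sum / window >= threshold:
            return episode
    return None
```

Calling it on the list of per-episode rewards collected during training returns the first episode at which the criterion is met, or `None` if it never is. Tracking the same rolling mean past that point is also a simple way to spot the later decline described above.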

Usage is quite simple, and everything is done through a Jupyter notebook: