Turning your Room into a 3D Scanner - Radiometry

Using uncalibrated photometric stereo to construct 3D surfaces of items at home.

3D Scanning might sound complicated, but really all you need is a camera and a strong light source. In this post, I’ll show you how to turn your room into a 3D scanner using unstructured light, and dive into the math behind this cool technique called Photometric Stereo.

How Does it Work?

This method is a product of “physics-based” vision, and it works by carefully reasoning about the mechanics of how light travels and interacts with objects in a scene. More specifically, we use results from Radiometry and Reflectance in a model with assumptions described in the section below.

The Setup

The setup is relatively simple. All you need is a camera, an object to photograph and a strong light source. Ideally, the camera should be orthographic, but in practice you can pick your longest focal length lens and place it as far as you can from the object while ensuring that there’s enough light to image the scene.

3D Reconstruction

Before we can address 3D scanning, we need to cover the basics of how light interacts with a scene and set out base assumptions.

Radiometry

This is the study of how electromagnetic radiation is measured. In our case, we are only dealing with visible light.

At its core, we model light as photons that can be described as \((x, ~\vec{d},~ \lambda)\), with \(x\) being its position, \(\vec{d}\) its direction, and \(\lambda\) its wavelength. Each photon has some energy determined by its wavelength, Planck’s constant and the speed of light in a vacuum. Therefore, to measure the energy of light, we just need to count the photons, which is exactly what a camera does!
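To make the photon-counting idea concrete, here is a minimal sketch of the energy relation \(E = \frac{hc}{\lambda}\). The function names and the 550 nm example wavelength are illustrative choices, not part of the original setup.

```python
# Physical constants in SI units (exact by definition in the 2019 SI)
PLANCK_H = 6.62607015e-34  # Planck's constant, J*s
LIGHT_C = 299_792_458.0    # speed of light in a vacuum, m/s


def photon_energy(wavelength_m: float) -> float:
    """Energy in joules of a single photon: E = h * c / wavelength."""
    return PLANCK_H * LIGHT_C / wavelength_m


def photon_count(energy_j: float, wavelength_m: float) -> float:
    """Number of photons of one wavelength that carry a given energy."""
    return energy_j / photon_energy(wavelength_m)


# Hypothetical example: green light at 550 nm
green_energy = photon_energy(550e-9)
photons_per_joule = photon_count(1.0, 550e-9)
```

A single green photon carries on the order of \(10^{-19}\) joules, which is why even a dim scene delivers an enormous number of photons to the sensor.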

With this simple definition, we can define many interesting quantities. For our application, we mainly care about the Radiance, but we should also mention Irradiance and Flux:

Flux

\(\Phi(A)\)

The total amount of light energy per unit time \([W]\)

Irradiance

\(E(x) = \frac{d\Phi(A)}{dA(x)}\)

The power of light incident per unit area \([W/m^2]\)

Radiance

\(L(x, \vec{\omega}) = \frac{d^2\Phi(A)}{dAd\vec{\omega}\cos\theta}\)

The power of light per unit projected area per unit solid angle \([W/m^2/sr]\), where \(\theta\) is the angle between \(\vec{\omega}\) and the surface normal

Reflectance and BRDFs

How a material reflects light is described by its Bidirectional Reflectance Distribution Function (BRDF). This function, which we’ll call \(f\), is the ratio of the outgoing light in direction \(\vec{\omega_o}\) (radiance) to the incoming light from direction \(\vec{\omega_i}\) (irradiance). The total reflected light in any direction is then given by integrating over all incoming directions:

\[L_o(x, \vec{\omega_o}) = \int_{H^2} f(x,\vec{\omega_i}, \vec{\omega_o}) L_i(x, \vec{\omega_i}) \cos\theta_i d\vec{\omega_i}\]

For our 3D scanning setup, we use the simplest BRDF: the Lambertian model. It assumes that the surface reflects light equally in all directions. In other words, the BRDF is a constant, independent of \(x\), \(\vec{\omega_i}\) and \(\vec{\omega_o}\):

\[f(x,\vec{\omega_i}, \vec{\omega_o}) = \frac{\rho}{\pi}\]

where \(\rho \in [0,1]\) is the surface albedo, determining how much of the incoming light is reflected out.
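As a quick check on where the \(\frac{1}{\pi}\) comes from (a worked step added here for illustration, using spherical coordinates \(\theta_o, \phi\) over the outgoing hemisphere), we can integrate the cosine-weighted constant BRDF over all outgoing directions:

\[\int_{H^2} \frac{\rho}{\pi}\cos\theta_o \, d\vec{\omega_o} = \frac{\rho}{\pi}\int_0^{2\pi}\int_0^{\pi/2}\cos\theta_o\sin\theta_o \, d\theta_o \, d\phi = \frac{\rho}{\pi}\cdot 2\pi\cdot\frac{1}{2} = \rho\]

So a Lambertian surface reflects exactly the fraction \(\rho\) of the energy it receives, which is why the albedo cannot exceed 1.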

Imaging Model: “n dot l”

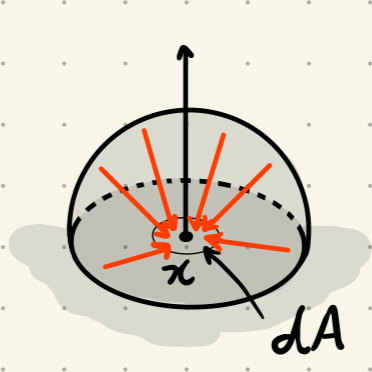

For the rest of this derivation, it helps to visualize the light interactions. The diagram shows incoming light with direction \(\vec{\omega_i}\) reflecting off a point \(x\) in direction \(\vec{\omega_o}\). Note the angle \(\theta_i\) between the incident ray and the surface normal \(\hat{n}\) at \(x\), a unit vector perpendicular to the surface of the object.

With the assumption of a Lambertian BRDF, our imaging model is given by

\[L_o(x, \vec{\omega_o}) = \frac{\rho}{\pi}\int_{H^2}L_i(x, \vec{\omega_i}) \cos\theta_i d\vec{\omega_i}\]

Before we get scanning, we need to make some additional important assumptions about our scene:

1. Far Field Lighting

All points \(x\) in the scene receive equal energy from incoming light. This means \(L(x,\vec{\omega})\) simplifies to \(L(\vec{\omega})\).

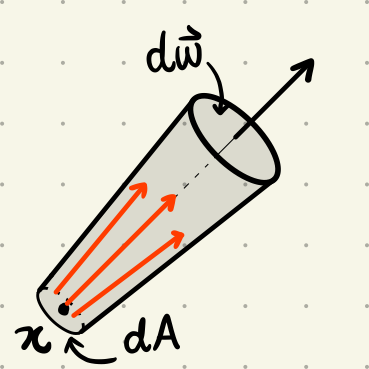

2. Directional Lighting

Light travels in a single direction, represented by vector \(\vec{l}\) with strength \(\|\vec{l}\|\) and direction \(\frac{\vec{l}}{\|\vec{l}\|}\).

3. Orthographic Camera

No perspective effects from the camera.

4. Lambertian BRDF

Object's surface is Lambertian with constant BRDF.

5. No Interreflections

Light does not bounce between surfaces; all measured light comes directly from source.

With these assumptions, we can revisit our imaging model. Firstly, we can express the incoming light as a Dirac delta:

\[L_i(x,\vec{\omega_i}) = L_i(\vec{\omega_i}) = \|\vec{l}\|\delta\left(\vec{\omega_i}-\frac{\vec{l}}{\|\vec{l}\|}\right)\]

That is, under the directional lighting assumption, all light in the scene must come from direction \(\frac{\vec{l}}{\|\vec{l}\|}\) with strength \(\|\vec{l}\|\). Substituting this into our imaging model,

\[L_o(\vec{\omega_o})=\frac{\rho}{\pi}\int_{H^2}\|\vec{l}\|\delta\left(\vec{\omega_i}-\frac{\vec{l}}{\|\vec{l}\|}\right) \cos\theta_i d\vec{\omega_i}\]

Then, by the definition of the Dirac delta, the integral collapses to the single incoming ray

\[L_o(\vec{\omega_o}) = \frac{\rho}{\pi}\|\vec{l}\|\cos(\theta_i)\]

Finally, since \(\hat{n}\) is a unit vector, \(\|\vec{l}\|\cos(\theta_i) = \hat{n}^\top\vec{l}\), and so the light \(L_o\) from \(\vec{\omega_o}\) is measured as

\[L_o(\vec{\omega_o}) = \frac{\rho}{\pi}\hat{n}^\top\vec{l} = a\hat{n}^\top\vec{l}\]

where \(a = \frac{\rho}{\pi}\) absorbs the albedo and the constant factor. We have now shown that under these assumptions, our imaging model is expressed cleanly as

\[I = a\hat{n}^\top\vec{l}\]

Next, we’ll see how to recover the normals and albedos from our scene, and from that, depth.
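To get a feel for the “n dot l” model, here is a minimal NumPy sketch that renders a synthetic Lambertian sphere under one directional light. Several things are assumptions made for this sketch and not part of the derivation above: the helper names, the choice of \(+z\) pointing toward the camera, and the clamping of negative values to zero to mimic points facing away from the light.

```python
import numpy as np


def sphere_normals(size: int = 64):
    """Unit normals of a sphere under an orthographic view (+z toward the camera)."""
    ys, xs = np.mgrid[-1:1:size * 1j, -1:1:size * 1j]
    r2 = xs**2 + ys**2
    mask = r2 < 1.0                              # pixels that lie on the sphere
    zs = np.sqrt(np.clip(1.0 - r2, 0.0, None))
    normals = np.stack([xs, ys, zs], axis=-1)    # unit length inside the mask
    normals[~mask] = 0.0                         # background has no normal
    return normals, mask


def render_lambertian(normals, albedo, light):
    """I = a * n^T l with a = albedo / pi; negative values are clamped to 0."""
    a = albedo / np.pi
    return a * np.clip(normals @ light, 0.0, None)


normals, mask = sphere_normals(64)
# Frontal light with strength pi, so a pixel facing the light reads the albedo
light = np.pi * np.array([0.0, 0.0, 1.0])
image = render_lambertian(normals, albedo=0.8, light=light)
```

Changing `light` and re-rendering produces exactly the kind of image stack that the next section works with.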

Surface Normals and Depth

As we’ve just seen, the “n dot l” model allows us to represent the image as a linear function of normals and light. This means that with multiple images, we can form a linear system:

\[\begin{align*} I_1 &= a\hat{n}^\top \vec{l_1}\\ I_2 &= a\hat{n}^\top \vec{l_2}\\ & \vdots\\ I_N &= a\hat{n}^\top \vec{l_N}\end{align*}\]

where \(I_1, \ldots, I_N\) are pixels from linear luminance images (think the Y channel in XYZ color space).
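As a rough sketch of how to get such a luminance image, the snippet below applies the standard Rec. 709 luminance weights to an RGB image. It assumes the input is already linear (no gamma curve applied) and uses sRGB/Rec. 709 primaries; a RAW pipeline with a different color space would need different weights. The function name is illustrative.

```python
import numpy as np

# Rec. 709 / sRGB luminance weights (the Y row of the linear-sRGB-to-XYZ matrix)
REC709_WEIGHTS = np.array([0.2126, 0.7152, 0.0722])


def linear_luminance(rgb_linear: np.ndarray) -> np.ndarray:
    """Map a (..., 3) linear RGB array to a (...) luminance array."""
    return rgb_linear @ REC709_WEIGHTS


# Hypothetical 2x2 image: white, black, pure red, pure green
rgb = np.array([[[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]],
                [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]])
lum = linear_luminance(rgb)
```

Linearity matters here: the “n dot l” model relates pixel values to light linearly, so gamma-encoded JPEG values would break the linear system.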

With this system, knowing the light vectors \(\vec{l_1}, \ldots, \vec{l_N}\) lets us solve for a ‘pseudo-normal’ \(b = a\hat{n}\) at each pixel, and from it recover \(\hat{n}\) directly. In fact, a well-calibrated system requires only three non-coplanar light directions to determine it.
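For the calibrated case, a minimal sketch of the solve looks like the following. It uses least squares so that more than three lights can be used; the function name, array layout, and the synthetic light vectors are assumptions made for this example.

```python
import numpy as np


def calibrated_photometric_stereo(I, L):
    """Solve L^T B = I for the pseudo-normals B.

    I: (N, P) stack of flattened luminance images.
    L: (3, N) known light vectors, one per column.
    Returns B with shape (3, P), one pseudo-normal per column.
    """
    B, *_ = np.linalg.lstsq(L.T, I, rcond=None)
    return B


# Synthetic check with four non-coplanar lights and five pixels
rng = np.random.default_rng(0)
L = np.array([[0.0, 0.5, -0.5, 0.3],
              [0.5, 0.0, 0.3, -0.5],
              [1.0, 1.0, 1.0, 1.0]])
B_true = rng.normal(size=(3, 5))
I = L.T @ B_true                      # ideal "n dot l" images, no shadows
B_est = calibrated_photometric_stereo(I, L)
```

With exactly three lights the system is square and the least-squares solution coincides with a direct inverse; extra lights make the estimate more robust to noise.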

However, calibrating for the light directions can be difficult, usually requiring mirror-surface spheres to pinpoint the direction of incoming light. Fortunately, we can use some more linear algebra to perform Photometric Stereo in an uncalibrated setting.

Let’s first look at how we can represent a single image in matrix form. For an image with \(P\) pixels, we can flatten it into a row vector \(I_1 \in \mathbb{R}^{1\times P}\). Similarly, we can represent the pseudo-normals for all pixels as a matrix \(B \in \mathbb{R}^{3\times P}\), where each column contains the pseudo-normal for one pixel. With a single light direction \(\vec{l_1} \in \mathbb{R}^3\), we can write:

\[I_1 = \vec{l_1}^\top B\]

If we instead have \(N\) different lighting directions, we can stack \(N\) images into a matrix \(I \in \mathbb{R}^{N\times P}\) and the light directions into a matrix \(L \in \mathbb{R}^{3\times N}\). This gives us the compact form:

\[I = L^\top B\]

where each row of \(I\) is a flattened image, each column of \(L\) is a light direction, and \(B\) remains the pseudo-normal matrix. This means that under our assumptions about the scene’s lighting and reflectance properties, the matrix \(I\) has rank at most 3 (exactly 3 when the light directions are not all coplanar and the surface normals span \(\mathbb{R}^3\)).

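We can now turn to an old friend in Linear Algebra, SVD, to decompose our captured, uncalibrated sequence \(I\) and use its rank 3 decomposition as our \(L\) and \(B\) matrices. This factorization is only determined up to an invertible \(3\times 3\) matrix \(A\), since \(L^\top B = (A^{-\top}L)^\top(AB)\); this is the source of the ambiguity discussed later in the post. With \(B\) recovered, we can easily retrieve the pseudo-normals \(b\) at each pixel, from which we recover the albedos \(\rho\) and normals \(\hat{n}\).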
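The factorization step can be sketched in a few lines of NumPy. Splitting the singular values evenly (by square root) between the two factors is an arbitrary choice made for this sketch, and the function name is illustrative.

```python
import numpy as np


def factorize_rank3(I):
    """Rank-3 factorization I ~= L_hat.T @ B_hat via truncated SVD.

    I: (N, P) stack of flattened luminance images, N >= 3.
    Returns L_hat (3, N) and B_hat (3, P), defined only up to a 3x3 ambiguity.
    """
    U, s, Vt = np.linalg.svd(I, full_matrices=False)
    sqrt_s = np.sqrt(s[:3])
    L_hat = (U[:, :3] * sqrt_s).T        # scale the first 3 left vectors
    B_hat = sqrt_s[:, None] * Vt[:3]     # scale the first 3 right vectors
    return L_hat, B_hat


# Synthetic rank-3 data: 6 lights, 50 pixels
rng = np.random.default_rng(0)
L_true = rng.normal(size=(3, 6))
B_true = rng.normal(size=(3, 50))
I = L_true.T @ B_true
L_hat, B_hat = factorize_rank3(I)
```

Note that `L_hat` and `B_hat` reproduce the images but will generally not equal `L_true` and `B_true`; they differ by an unknown invertible \(3\times 3\) matrix.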

All that is left is to turn the normal information into depth. Thankfully (under an orthographic camera model) this is fairly simple. The normal is proportional to

\[\hat{n} \propto \begin{bmatrix}\frac{\partial D}{\partial x} & \frac{\partial D}{\partial y} & -1\end{bmatrix}\]

where \(D\) is the depth image, and normalizing this vector gives the unit normal. As such, all we need to do is to integrate the normal field in order to retrieve the depth image, which we can do with standard integration algorithms such as Poisson Integration (a common approach to reconstructing images from gradients). Note: this process is actually more involved, and it requires enforcing integrability for the normal field.
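As one possible illustration of the integration step, the sketch below uses the Frankot-Chellappa method, an FFT-based least-squares integrator that projects the gradient field onto the nearest integrable one. This is a swapped-in technique for illustration, not necessarily the Poisson solver used for the results in this post, and it assumes periodic image boundaries. The function names are hypothetical.

```python
import numpy as np


def normals_to_gradients(normals, eps=1e-8):
    """(H, W, 3) normals proportional to [dD/dx, dD/dy, -1] -> gradients (p, q)."""
    nz = normals[..., 2]
    nz = np.where(np.abs(nz) < eps, -eps, nz)   # guard against grazing normals
    return -normals[..., 0] / nz, -normals[..., 1] / nz


def integrate_gradients(p, q):
    """Least-squares depth from p = dD/dx and q = dD/dy, up to a constant."""
    rows, cols = p.shape
    u = 2 * np.pi * np.fft.fftfreq(cols)        # angular frequency along x
    v = 2 * np.pi * np.fft.fftfreq(rows)        # angular frequency along y
    U, V = np.meshgrid(u, v)                    # both have shape (rows, cols)
    denom = U**2 + V**2
    denom[0, 0] = 1.0                           # avoid dividing by zero at DC
    D_hat = (-1j * U * np.fft.fft2(p) - 1j * V * np.fft.fft2(q)) / denom
    D_hat[0, 0] = 0.0                           # mean depth is unrecoverable
    return np.real(np.fft.ifft2(D_hat))


# Synthetic check on a smooth periodic surface with known gradients
rows, cols = 32, 48
y, x = np.mgrid[0:rows, 0:cols]
D_true = np.cos(2 * np.pi * 2 * x / cols) + np.sin(2 * np.pi * 3 * y / rows)
p_true = -(2 * np.pi * 2 / cols) * np.sin(2 * np.pi * 2 * x / cols)
q_true = (2 * np.pi * 3 / rows) * np.cos(2 * np.pi * 3 * y / rows)
n = np.stack([p_true, q_true, -np.ones_like(p_true)], axis=-1)
n /= np.linalg.norm(n, axis=-1, keepdims=True)
p, q = normals_to_gradients(n)
D_rec = integrate_gradients(p, q)
```

On real captures the object rarely fills a periodic image, so padding or masking strategies are usually needed; those are outside the scope of this sketch.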

With all the work laid out, all that is left is to capture our object under different light sources and solve a linear system.

To bring everything together, let’s recap the steps presented here:

- Capture \(N \geq 3\) luminance images of a scene from different light source positions

- Create a matrix \(I\) with the \(N\) images and perform SVD on it to retrieve the pseudo-normals \(B\)

- Store the magnitude of each column of \(B\) (albedos) and normalize them (normals)

- Enforce integrability and integrate the normal field to retrieve depth
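The third step in the list above, splitting pseudo-normals into albedos and normals, can be sketched as follows. The function name is illustrative. Note that the magnitude returned here is \(a = \frac{\rho}{\pi}\) scaled by the unknown light strength, so in the uncalibrated setting it is only proportional to the true albedo.

```python
import numpy as np


def split_pseudo_normals(B, eps=1e-12):
    """Split pseudo-normals B (3, P) into magnitudes (P,) and unit normals (3, P)."""
    a = np.linalg.norm(B, axis=0)            # per-pixel magnitude (scaled albedo)
    normals = B / np.maximum(a, eps)         # eps avoids dividing by zero
    return a, normals


# Two hypothetical pixels: pseudo-normals (0, 0, 2) and (0.3, 0, 0.4)
B = np.array([[0.0, 0.3],
              [0.0, 0.0],
              [2.0, 0.4]])
a, normals = split_pseudo_normals(B)
```

Reshaping `a` and each row of `normals` back to the image dimensions gives the albedo map and normal map.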

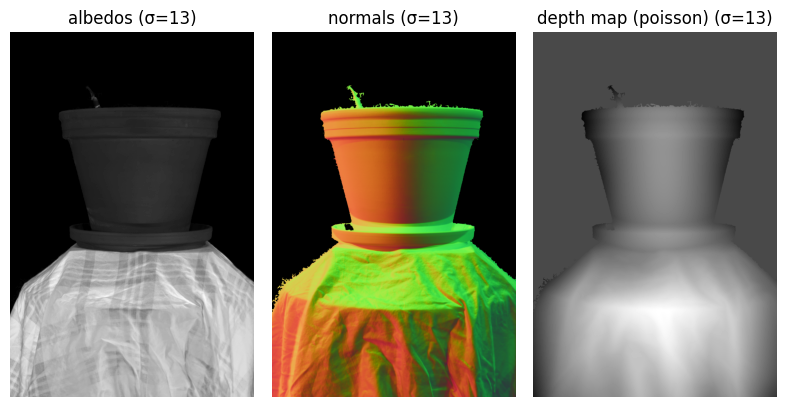

Results

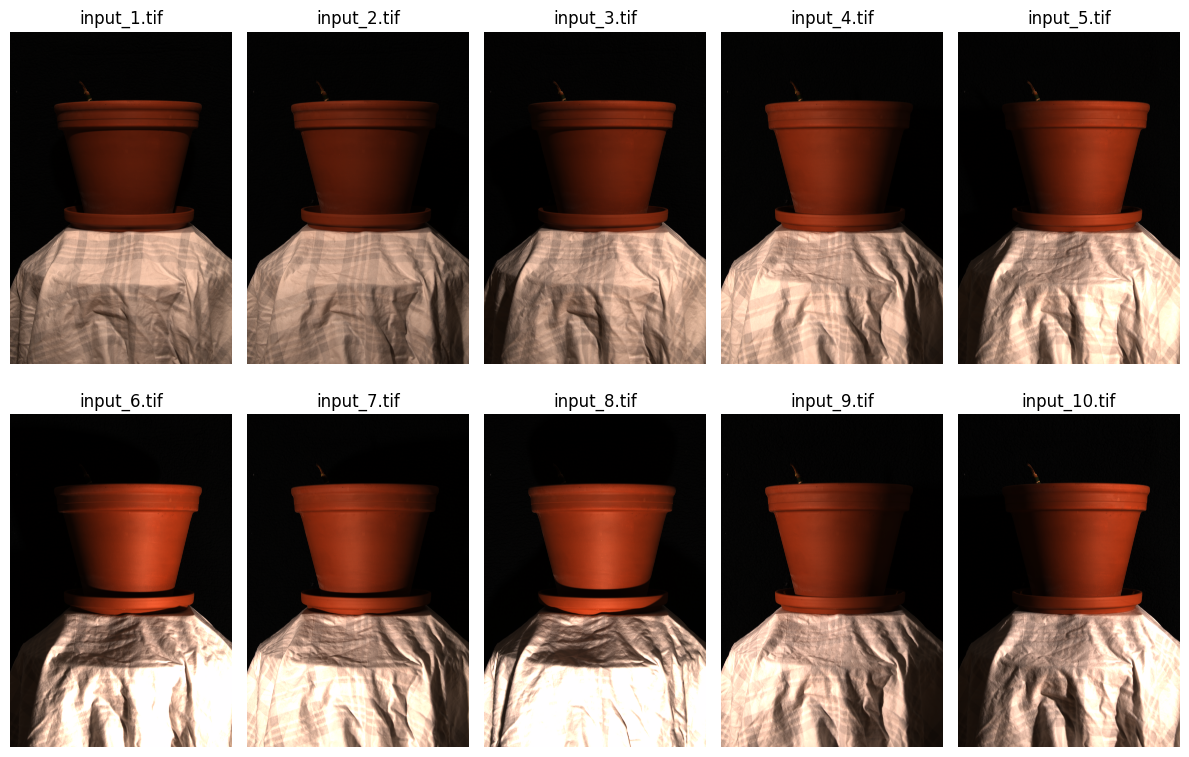

We can capture images from different lighting directions and follow the steps outlined above to get the 3D shape of objects!

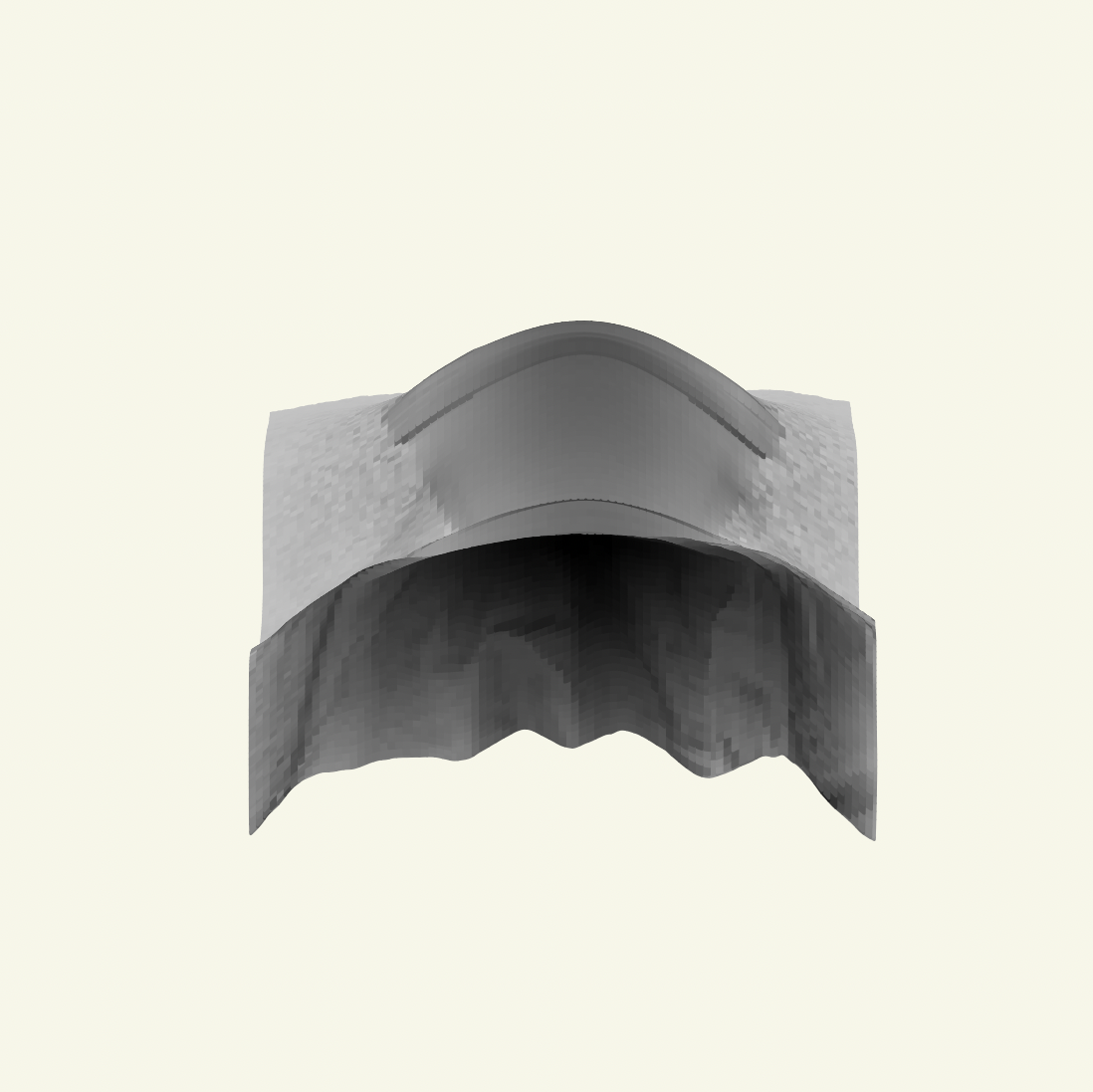

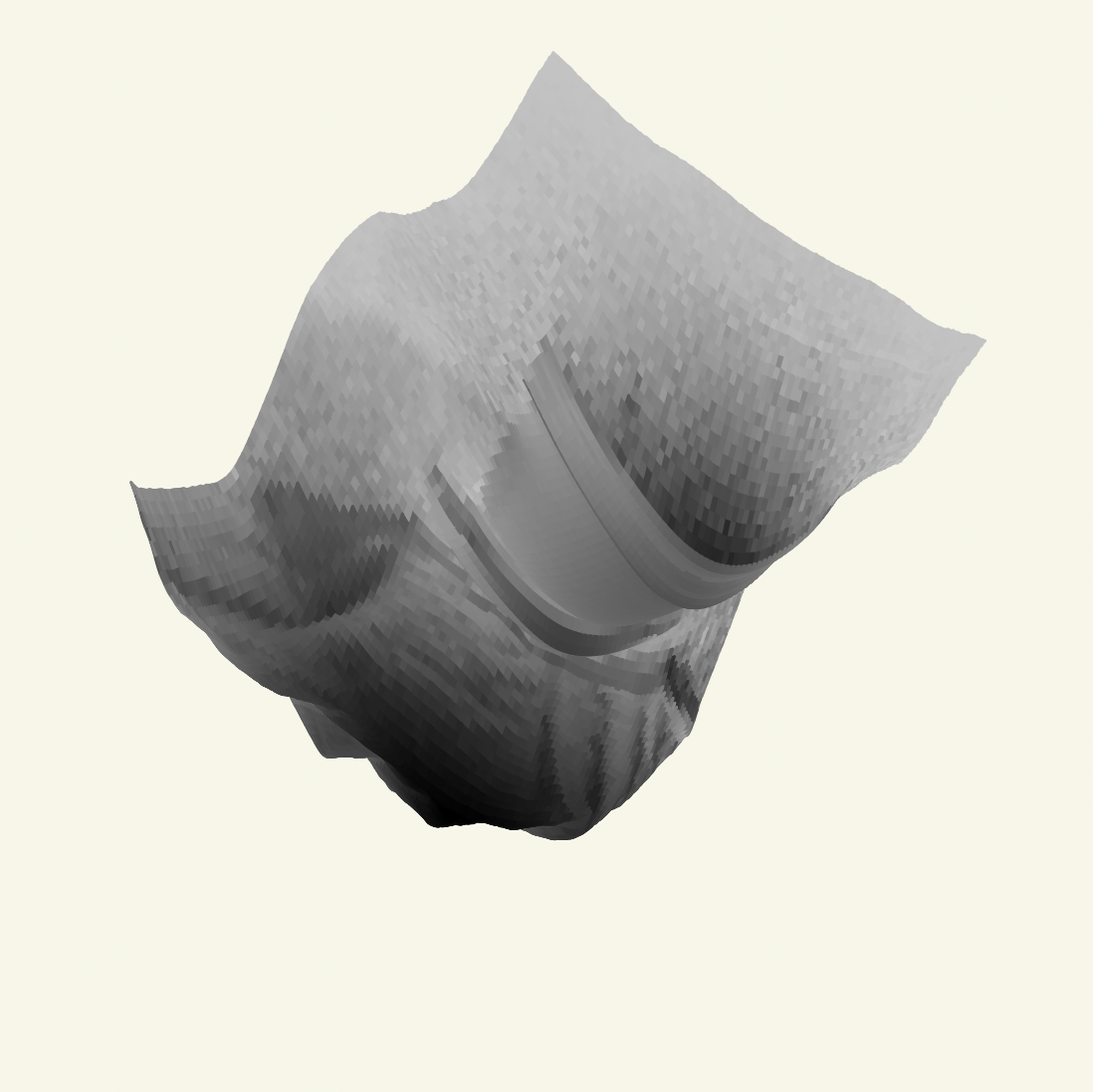

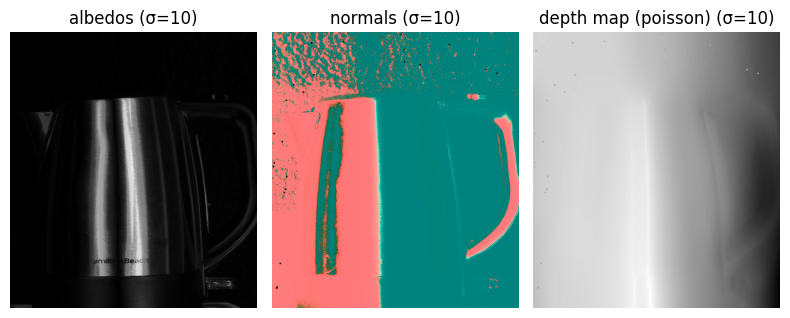

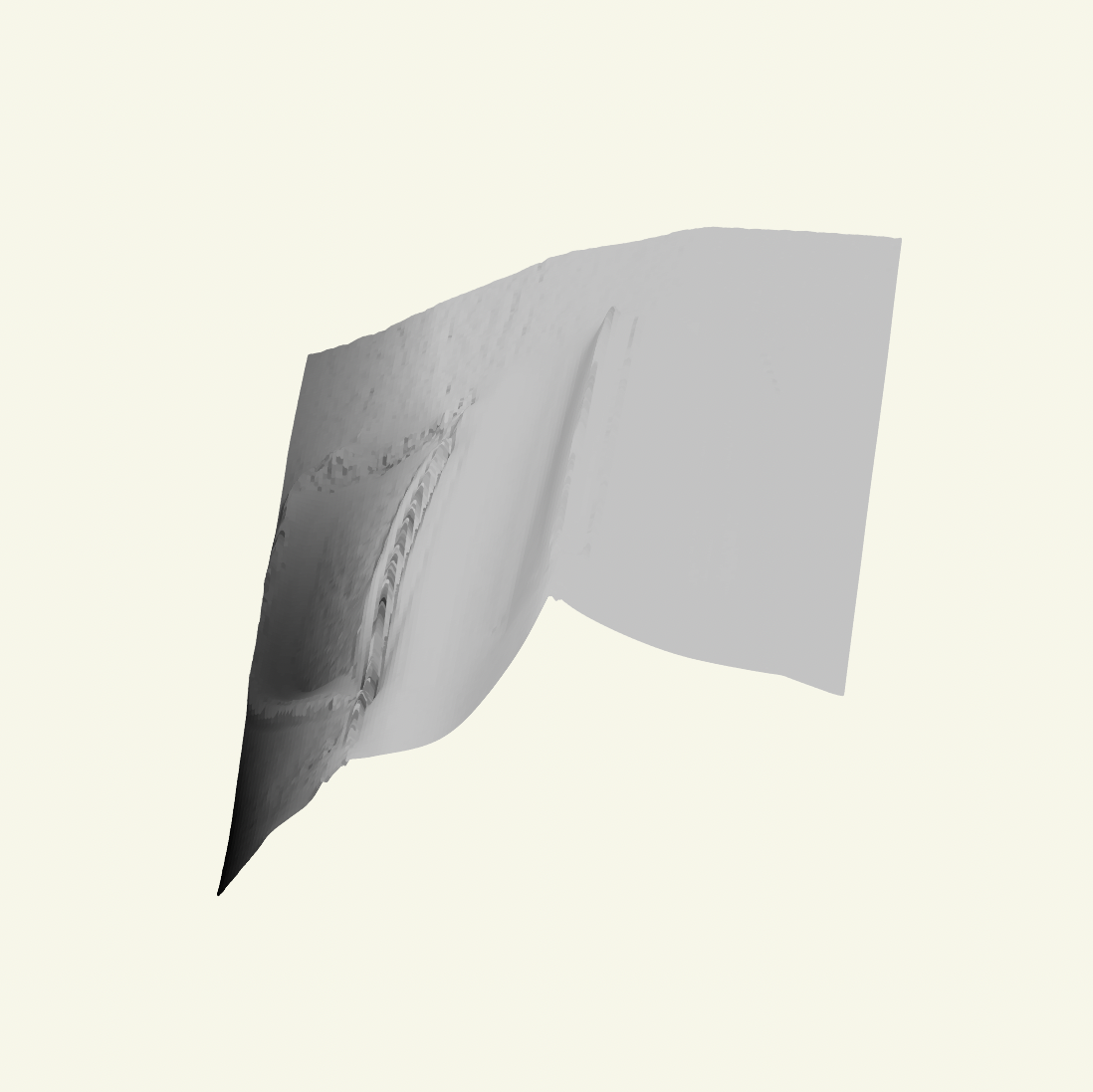

Note the relatively uniform albedo throughout the pot. To its right, the normal map shows the normals rotating smoothly around the pot, as expected. The depth map confirms this: depth is smallest at the center, where the pot is closest to the camera, and gradually increases towards the edges. We can inspect the results more closely by turning them into a 3D mesh:

The reconstructed shape isn’t perfect, but it is decent for a home setup. The side profile view shows surprisingly good detail in the reconstruction of the pot.

Challenges, Solutions and Limitations

As previously discussed, this method uses several assumptions that may not always be true. Even if we set up our scanning to satisfy these assumptions, other problems can come up, such as the Bas-Relief ambiguity.
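To see why the Bas-Relief ambiguity is a problem, the sketch below applies a generalized bas-relief transformation to a random set of pseudo-normals and lights and checks that the images do not change. The matrix form \(G\) follows the standard definition from the literature, with parameters \(\mu, \nu, \lambda\) chosen arbitrarily here; the variable names and the random data are assumptions made for the example.

```python
import numpy as np


def gbr_matrix(mu: float, nu: float, lam: float) -> np.ndarray:
    """Generalized bas-relief matrix; lam scales depth, mu and nu add a tilt."""
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [mu, nu, lam]])


rng = np.random.default_rng(1)
B = rng.normal(size=(3, 10))     # pseudo-normals for 10 pixels
L = rng.normal(size=(3, 4))      # 4 light vectors

G = gbr_matrix(mu=0.3, nu=-0.2, lam=0.5)
B_alt = np.linalg.inv(G).T @ B   # transformed pseudo-normals
L_alt = G @ L                    # compensating lights

# Both (L, B) and (L_alt, B_alt) explain exactly the same images
same_images = np.allclose(L.T @ B, L_alt.T @ B_alt)
```

Because a flattened or tilted surface lit by suitably adjusted lights produces identical images, the data alone cannot tell these shapes apart.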

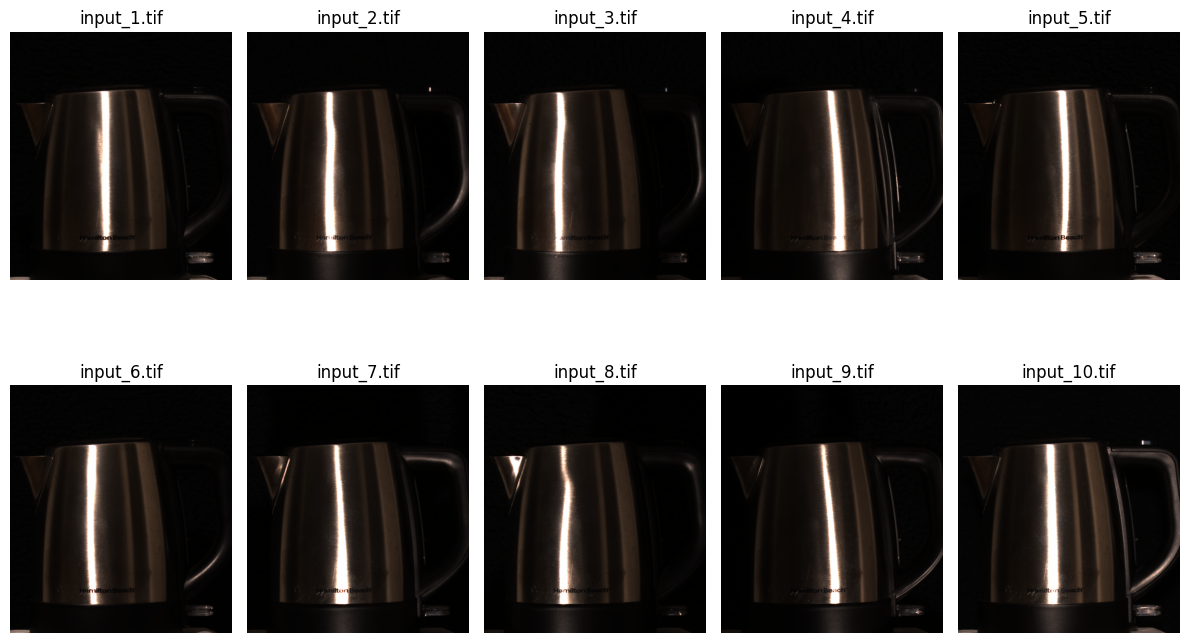

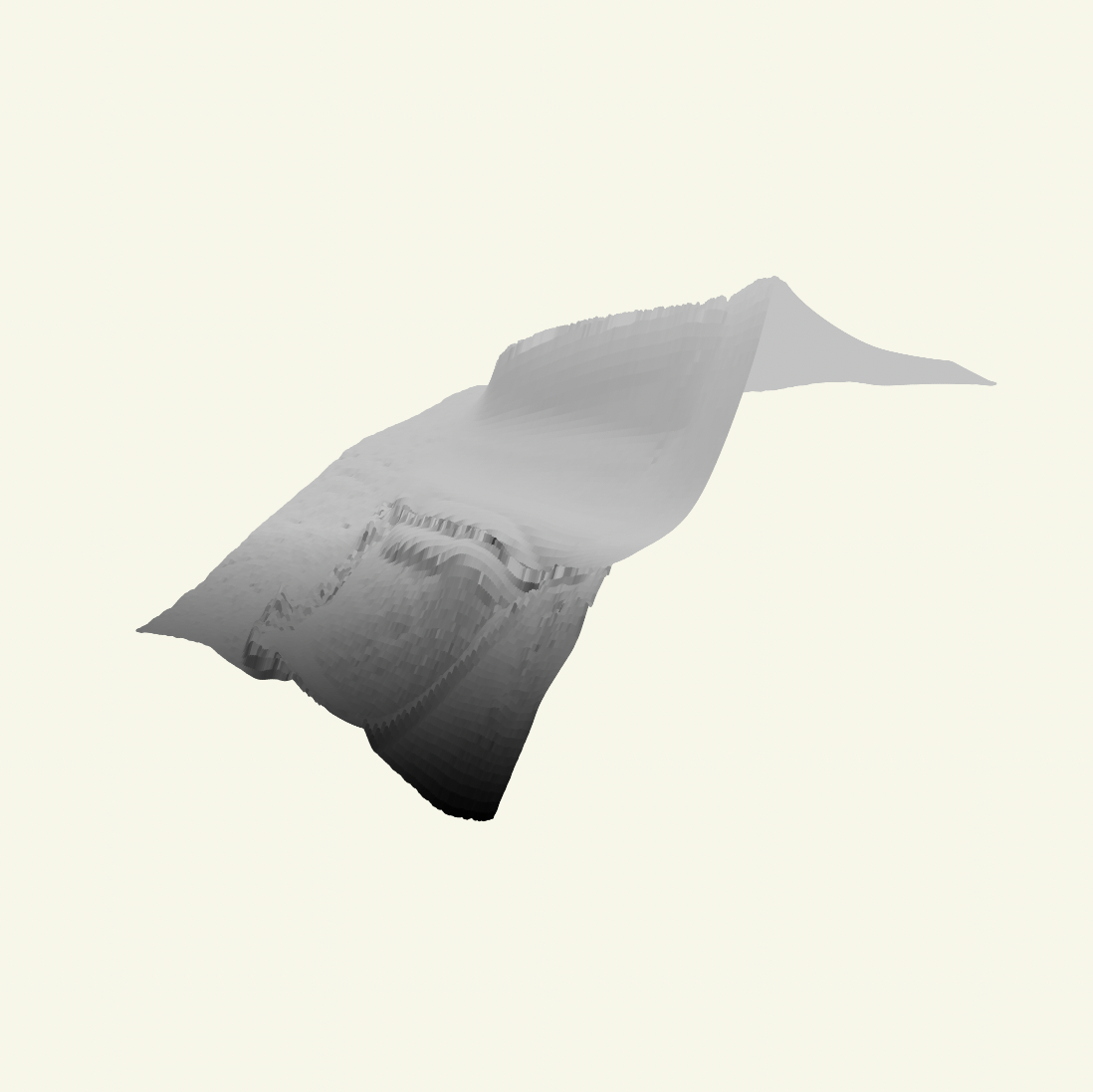

One of the cornerstone assumptions of this method is that the object we scan has a Lambertian surface. To illustrate what happens when that doesn’t hold, here is an example of Photometric Stereo applied to an object with a reflective surface (an electric kettle):

This object no longer satisfies the “n dot l” model, and as such the process doesn’t work. We see inaccurate sharp edges near the center, and very little and inconsistent shape otherwise.

To address some of these issues, check out 3D Scanning with Structured Light! Rather than working with the complicated physics of light transport, it relies on simple geometry.

Build Your Own!

Despite some complicated math, the actual setup for this process is very simple!

Want to do it yourself? All you need is:

- A camera that shoots RAW

- A light source you can move around

- Your favorite Lambertian object

To get good results, try as best as you can to satisfy the orthographic camera, directional light and far field assumptions. You can do this by using the longest focal length lens you have and setting the camera far from the object being scanned. To avoid interreflections, use a diffuse background. Happy scanning! 📷