Turning your Room into a 3D Scanner - Triangulation

Using structured light (shadows) to create dense 3D point clouds at home.

3D scanning with structured light sounds very complex, but all you really need is a camera, a strong light source, and your favorite stick. In this post, I’ll show you how to turn your room into a 3D scanner using structured light triangulation - specifically, using shadows as our “structured light.”

How Does it Work?

The key principle is triangulation: when a shadow falls on an object, we know that any point in the shadow must lie on the plane created by the light source and the edge casting the shadow. By tracking where shadows cross each point on the object and knowing the geometry of these shadow planes, we can determine the 3D position of each point through triangulation.
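To make the principle concrete, here is a minimal NumPy sketch. It assumes, purely for illustration, that we already know the light position and two points on the stick’s edge. The actual pipeline below never needs these, because it recovers each shadow plane from calibrated reference planes instead. The function names are hypothetical.

```python
import numpy as np

def shadow_plane_from_light(light, edge_a, edge_b):
    """Plane through a point light and a straight edge, as (point, unit normal).

    light, edge_a, edge_b: 3D points, assumed known here for illustration only.
    """
    light = np.asarray(light, dtype=float)
    normal = np.cross(np.asarray(edge_a, float) - light, np.asarray(edge_b, float) - light)
    return light, normal / np.linalg.norm(normal)

def on_plane(point, plane_point, normal, tol=1e-9):
    """True if `point` satisfies (point - plane_point) . normal = 0 (within tol)."""
    return abs(np.dot(np.asarray(point, float) - plane_point, normal)) < tol

# Light at (0, 0, 2); stick edge from (1, 0, 1) to (1, 1, 1).
p0, n = shadow_plane_from_light([0, 0, 2], [1, 0, 1], [1, 1, 1])
# The ray from the light through edge point (1, 0.5, 1) hits the floor z = 0 at (2, 1, 0),
# so that shadow point must lie on the shadow plane:
print(on_plane([2, 1, 0], p0, n))  # True
```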

The Setup

The setup includes a point light source, a camera and a stable place to set up your scene. It is important that nothing in the setup moves, since the extrinsic calibration (covered in the next section) is only valid for a fixed camera and scene.

3D Reconstruction

The general framework consists of three main steps. First, we calibrate the scene to establish the relationship between the camera and world coordinates. Then, we detect the shadow lines as they move across the scene by analyzing changes in pixel intensities. Finally, we use triangulation - finding the intersection between the camera ray for each pixel and the detected shadow planes - to recover the 3D position of points in the scene. Let’s dive into each of these steps in detail.

1. Calibration

Before we capture the frames we’ll use for the 3D reconstruction, we need to calibrate the scene. Unlike Photometric Stereo, which relied on shading and reflection, this method relies on geometric relationships. For accurate 3D reconstruction, we need a precise mapping between the 2D image space and the 3D world space. This requires two types of calibration:

Intrinsic Camera Calibration: This determines how 3D points in the camera’s coordinate system map to 2D pixels in the image. It accounts for parameters like focal length and optical center. This is simple enough, and we can use something like OpenCV’s pre-built calibration functions to retrieve the intrinsic matrix:

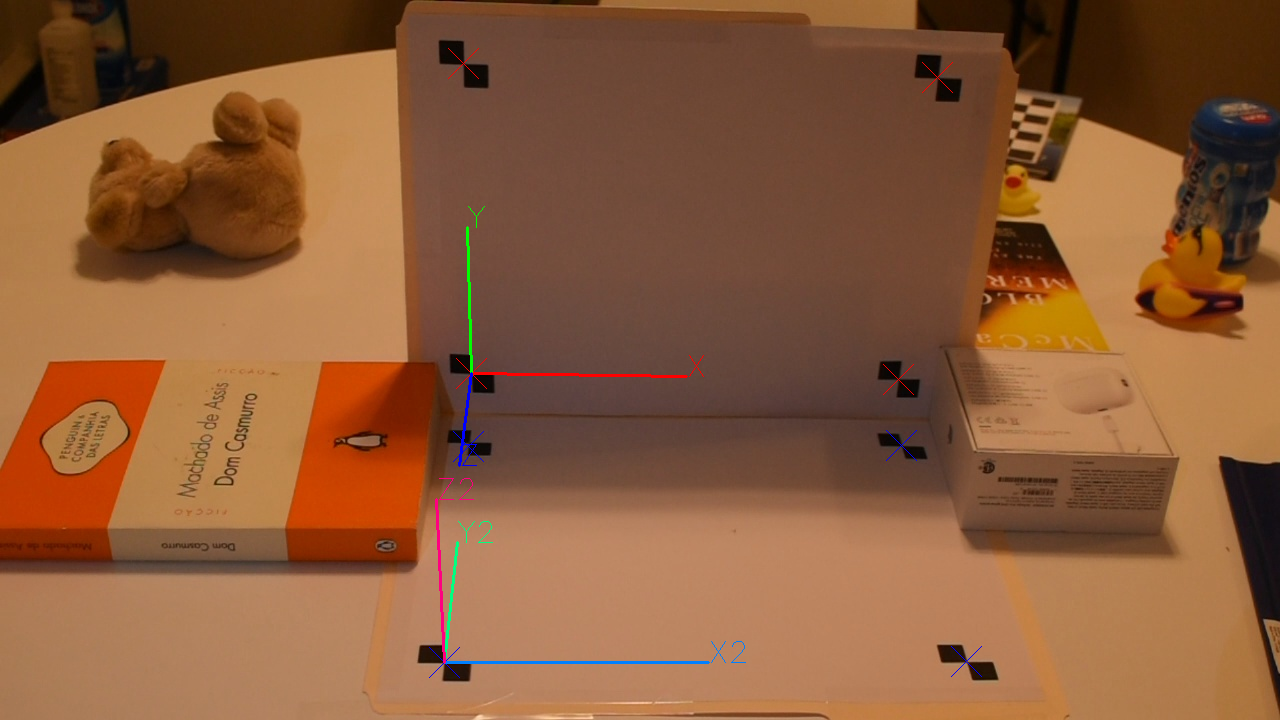
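The job of the intrinsic matrix \(K\) can be sketched as a pinhole projection. This minimal NumPy example ignores lens distortion and uses made-up intrinsics. In practice, \(K\) and the distortion coefficients would come from OpenCV’s `cv2.calibrateCamera`, fed with checkerboard corners found by `cv2.findChessboardCorners`. The helper `project` is hypothetical.

```python
import numpy as np

def project(K, points_cam):
    """Project Nx3 camera-frame points to Nx2 pixel coordinates (pinhole model, no distortion)."""
    pts = np.asarray(points_cam, dtype=float)
    uvw = pts @ K.T                      # homogeneous pixel coordinates, one row per point
    return uvw[:, :2] / uvw[:, 2:3]      # perspective divide by depth

# Illustrative intrinsics (made-up values): focal length 800 px, principal point (320, 240).
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])

print(project(K, [[0.1, -0.05, 2.0]]))  # [[360. 220.]]
```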

Extrinsic Scene Calibration: This establishes how points in the world coordinate system relate to the camera’s coordinate system through a rotation matrix and a translation vector. We do this by placing anchors at known positions in the scene. Together with the pre-computed intrinsic matrix, these anchors let us compute the rotation and translation between the camera and each plane defined by the anchor points:

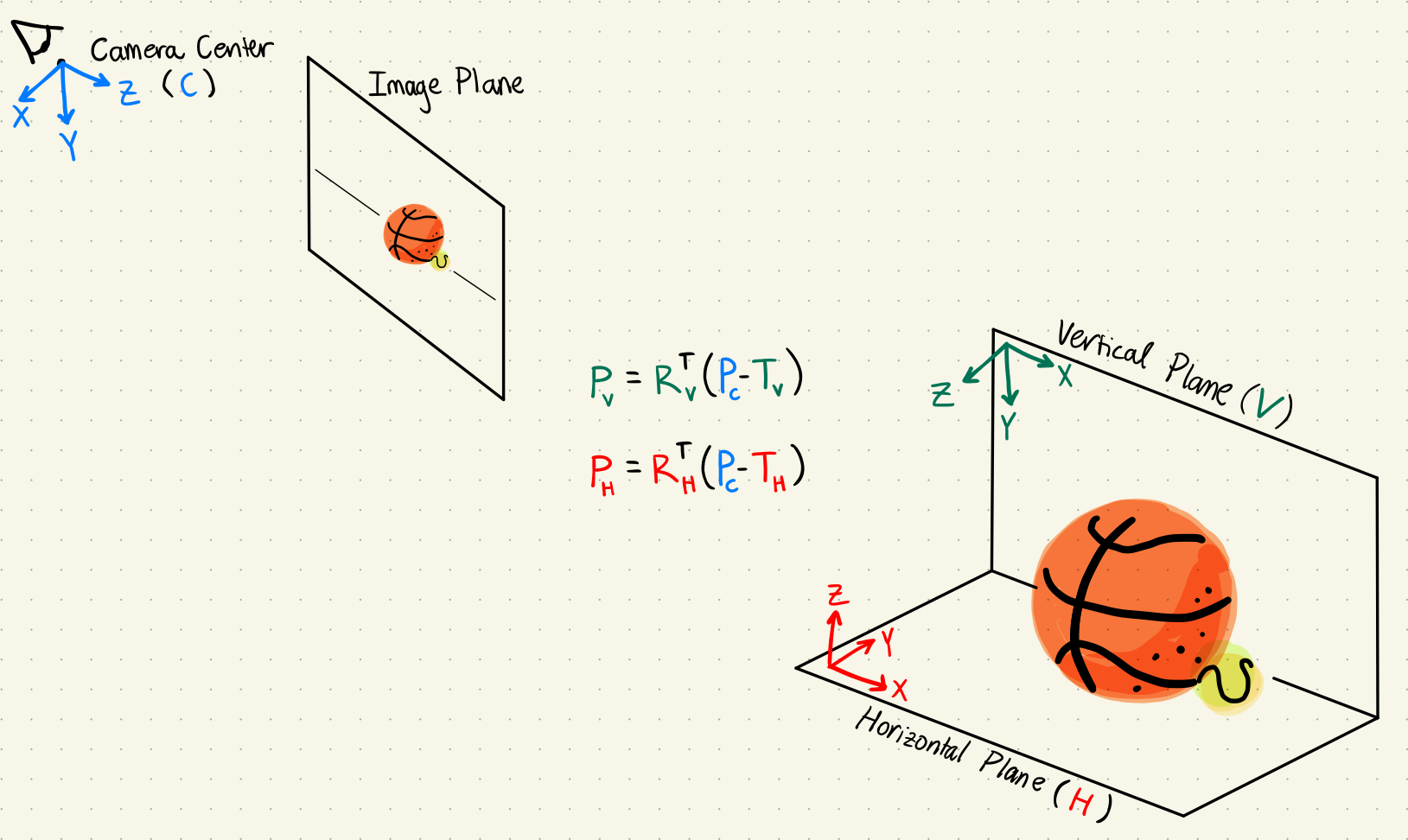
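OpenCV’s `cv2.solvePnP` is one ready-made way to get this rotation and translation from anchor correspondences. As a self-contained alternative, here is a sketch of the classic planar approach (Zhang-style homography decomposition). I am swapping it in for illustration; it is not necessarily what was used for these scans. The idea is to estimate the homography between the anchors’ 2D plane coordinates and their pixels, then factor out \(K\). The sketch assumes at least four anchors on the plane \(Z = 0\), no three of them collinear, and no lens distortion. The function names are hypothetical.

```python
import numpy as np

def homography_dlt(plane_xy, pixels):
    """Estimate H with pixel ~ H @ [X, Y, 1] from >= 4 correspondences (direct linear transform)."""
    rows = []
    for (X, Y), (u, v) in zip(plane_xy, pixels):
        rows.append([X, Y, 1, 0, 0, 0, -u * X, -u * Y, -u])
        rows.append([0, 0, 0, X, Y, 1, -v * X, -v * Y, -v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)           # null-space vector = flattened H (up to scale)

def plane_pose(K, plane_xy, pixels):
    """Recover R, T such that P_c = R @ P_plane + T for points on the plane Z = 0."""
    M = np.linalg.inv(K) @ homography_dlt(plane_xy, pixels)   # = s * [r1 r2 T]
    M /= np.linalg.norm(M[:, 0])          # fix the scale so that |r1| = 1
    if M[2, 2] < 0:                       # the plane origin must be in front of the camera
        M = -M
    r1, r2, T = M[:, 0], M[:, 1], M[:, 2]
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)           # snap to the nearest true rotation matrix
    return U @ Vt, T

# Synthetic check: a plane tilted 0.3 rad about the camera x-axis, origin 2 m away.
K = np.array([[800.0, 0, 320], [0, 800.0, 240], [0, 0, 1]])
c, s = np.cos(0.3), np.sin(0.3)
R_true = np.array([[1, 0, 0], [0, c, -s], [0, s, c]])
T_true = np.array([0.1, -0.05, 2.0])
xy = np.array([[0, 0], [0.2, 0], [0, 0.2], [0.2, 0.2], [0.1, 0.05]])  # anchor positions (m)
P_c = xy @ R_true[:, :2].T + T_true       # anchors in camera coordinates
uv = (P_c @ K.T)[:, :2] / P_c[:, 2:3]     # their pixel projections
R_est, T_est = plane_pose(K, xy, uv)
print(np.allclose(R_est, R_true, atol=1e-6), np.allclose(T_est, T_true, atol=1e-6))  # True True
```

The same call is made once per plane, with the horizontal anchors for \(R_h, T_h\) and the vertical ones for \(R_v, T_v\).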

World Model: With the calibration step done, we now have three coordinate systems: the camera’s, the horizontal plane’s and the vertical plane’s. In practice, this means we have two important matrix-vector pairs mapping points between the camera’s and the planes’ coordinate systems: \(R_h,~ T_h\) for the horizontal plane, and \(R_v,~ T_v\) for the vertical plane.

To convert a point \(P_c\) from the camera’s coordinate system to the horizontal plane’s coordinates \(P_h\), for example, we first subtract the plane’s origin \(T_h\) (expressed in camera coordinates) and then rotate the result into the plane’s axes: \(P_h = R_h^T(P_c - T_h)\).
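Each conversion takes one line of code. Here is a minimal NumPy sketch using the convention above, where \(T\) is the plane’s origin in camera coordinates. The helper names are hypothetical.

```python
import numpy as np

def camera_to_plane(R, T, P_c):
    """P_h = R^T (P_c - T): express a camera-frame point in a calibrated plane's frame."""
    return R.T @ (np.asarray(P_c, dtype=float) - T)

def plane_to_camera(R, T, P_h):
    """Inverse mapping: P_c = R P_h + T."""
    return R @ np.asarray(P_h, dtype=float) + T

# Example: plane axes rotated 90 degrees about the camera z-axis, origin 2 m in front of the camera.
R = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
T = np.array([0.0, 0.0, 2.0])
P_h = camera_to_plane(R, T, [1.0, 0.0, 2.0])
print(P_h)  # third (Z) component is 0, so this point lies on the plane
```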

These transformations will be crucial when we need to express shadow planes detected in the camera’s coordinates in terms of our calibrated world planes.

2. Shadow Detection

Once calibrated, we capture a video sequence of a stick’s shadow moving across the scene. The key is detecting exactly when the shadow crosses each pixel. We do this by:

- Finding Shadow Crossings: For each pixel, we track when its intensity drops significantly below a pre-defined threshold, which indicates a shadow crossing. Recording this crossing time for every pixel gives us a “time-of-crossing” map.

- Shadow Line Fitting: For each frame, we fit a line to the shadow-crossing pixels on each of the two reference planes (horizontal and vertical), giving line parameters \(a, b, c\) in the form \(ax + by + c = 0\).

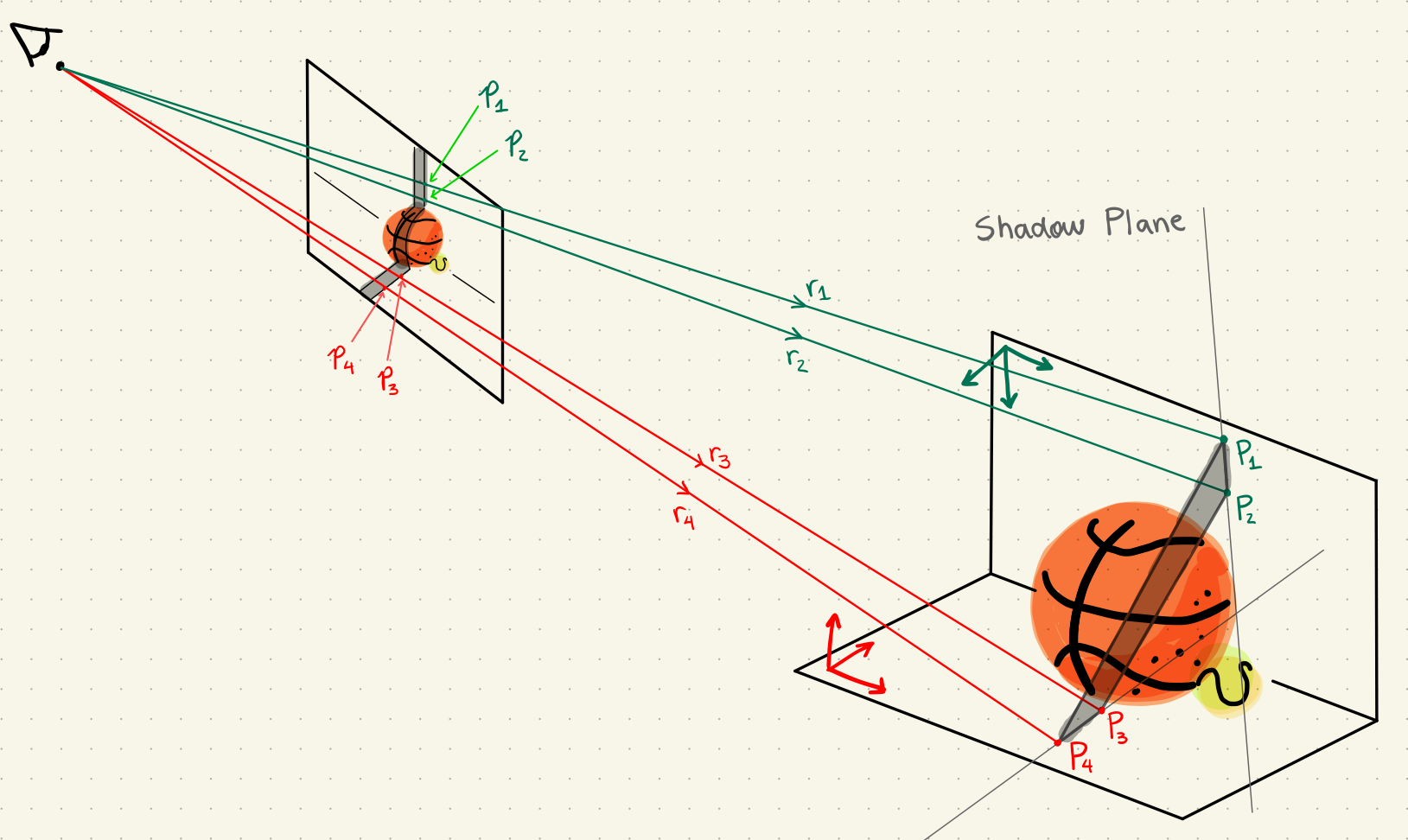
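Here is a minimal NumPy sketch of both steps. It rests on assumptions of my own:

- The video is a grayscale array of shape (frames, height, width).
- Each pixel’s threshold is the midpoint between its darkest and brightest values. This is one common choice, not necessarily the post’s pre-defined threshold.
- Pixels with too little contrast (such as very dark surfaces) are marked invalid with -1.
- Lines are fitted by total least squares.

In the real setup, the fit would be run separately on the pixels of the horizontal and vertical planes, for example with one mask per plane. All names are hypothetical.

```python
import numpy as np

def time_of_crossing(video, min_contrast=30.0):
    """Per-pixel index of the first frame where the shadow arrives (-1 if none or unreliable)."""
    v = np.asarray(video, dtype=float)
    lo, hi = v.min(axis=0), v.max(axis=0)
    below = v < 0.5 * (lo + hi)                       # True where the pixel is in shadow
    entering = ~below[:-1] & below[1:]                # lit at frame t-1, shadowed at frame t
    first = np.argmax(entering, axis=0) + 1           # frame index of the first such transition
    valid = entering.any(axis=0) & (hi - lo >= min_contrast)  # skip flat or too-dark pixels
    return np.where(valid, first, -1)

def fit_line(xs, ys):
    """Total-least-squares fit of a*x + b*y + c = 0 with a^2 + b^2 = 1."""
    pts = np.column_stack([xs, ys]).astype(float)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid)
    a, b = vt[-1]                                     # least-variance direction = line normal
    return a, b, -(a * centroid[0] + b * centroid[1])

# Synthetic video: a diagonal shadow edge sweeping across a 4x6 image.
F, H, W = 12, 4, 6
yy, xx = np.mgrid[0:H, 0:W]
video = np.stack([np.where(xx + yy < t, 20.0, 200.0) for t in range(F)])
tmap = time_of_crossing(video)                        # pixel (x, y) is crossed at frame x + y + 1
ys, xs = np.nonzero(tmap == 4)                        # pixels crossed at frame 4
a, b, c = fit_line(xs, ys)                            # the line x + y = 3, up to sign
```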

Using the calibration transformations, we can back-project two points on each line segment into rays. Intersecting these rays with the corresponding horizontal or vertical plane gives four 3D points that define the shadow plane.
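Here is a sketch of this step and of the plane construction that follows. It uses the calibration convention \(P_c = R P + T\) for points on each reference plane, with each plane being \(Z = 0\) in its own frame, and it ignores lens distortion. Function names are hypothetical.

```python
import numpy as np

def backproject(K, u, v):
    """Direction (camera frame) of the ray through pixel (u, v); the ray starts at the origin."""
    return np.linalg.solve(K, np.array([u, v, 1.0]))

def intersect_reference_plane(K, R, T, u, v):
    """Camera-frame point where pixel (u, v)'s ray meets a calibrated plane (R, T).

    The plane is Z = 0 in its own frame, so in camera coordinates it passes
    through T with normal R[:, 2] (the plane's Z axis).
    """
    d = backproject(K, u, v)
    n = R[:, 2]
    lam = np.dot(n, T) / np.dot(n, d)    # solve n . (lam * d - T) = 0
    return lam * d

def shadow_plane(P1, P2, P3, P4):
    """Shadow plane as (P1, unit normal), with normal (P3 - P4) x (P1 - P2).

    P1, P2 lie on one shadow segment and P3, P4 on the other; the cross
    product degenerates if the two segments are parallel.
    """
    n = np.cross(P3 - P4, P1 - P2)
    return P1, n / np.linalg.norm(n)

# Usage: a fronto-parallel plane 2 m away, and four points lying on the plane x = 1.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P = intersect_reference_plane(K, np.eye(3), np.array([0.0, 0.0, 2.0]), 360, 220)
P1, n_hat = shadow_plane(np.array([1.0, 0, 2]), np.array([1.0, 1, 2]),
                         np.array([1.0, 0, 3]), np.array([1.0, 0, 4]))
```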

This takes us from the pixel locations \(p_1,~ p_2,~ p_3,~ p_4\), given by the pre-computed line equations, to 3D points \(P_1,~ P_2,~ P_3,~ P_4\) in the camera’s coordinate system. The last step is to define the plane by its normal:

\[\hat{n} = (P_3-P_4) \times (P_1-P_2)\]

Thus the plane is the set of points \(S\) where

\[S = \{P \in \mathbb{R}^3 ~|~ (P-P_1)\cdot \hat{n} = 0\}\]

3. Triangulation

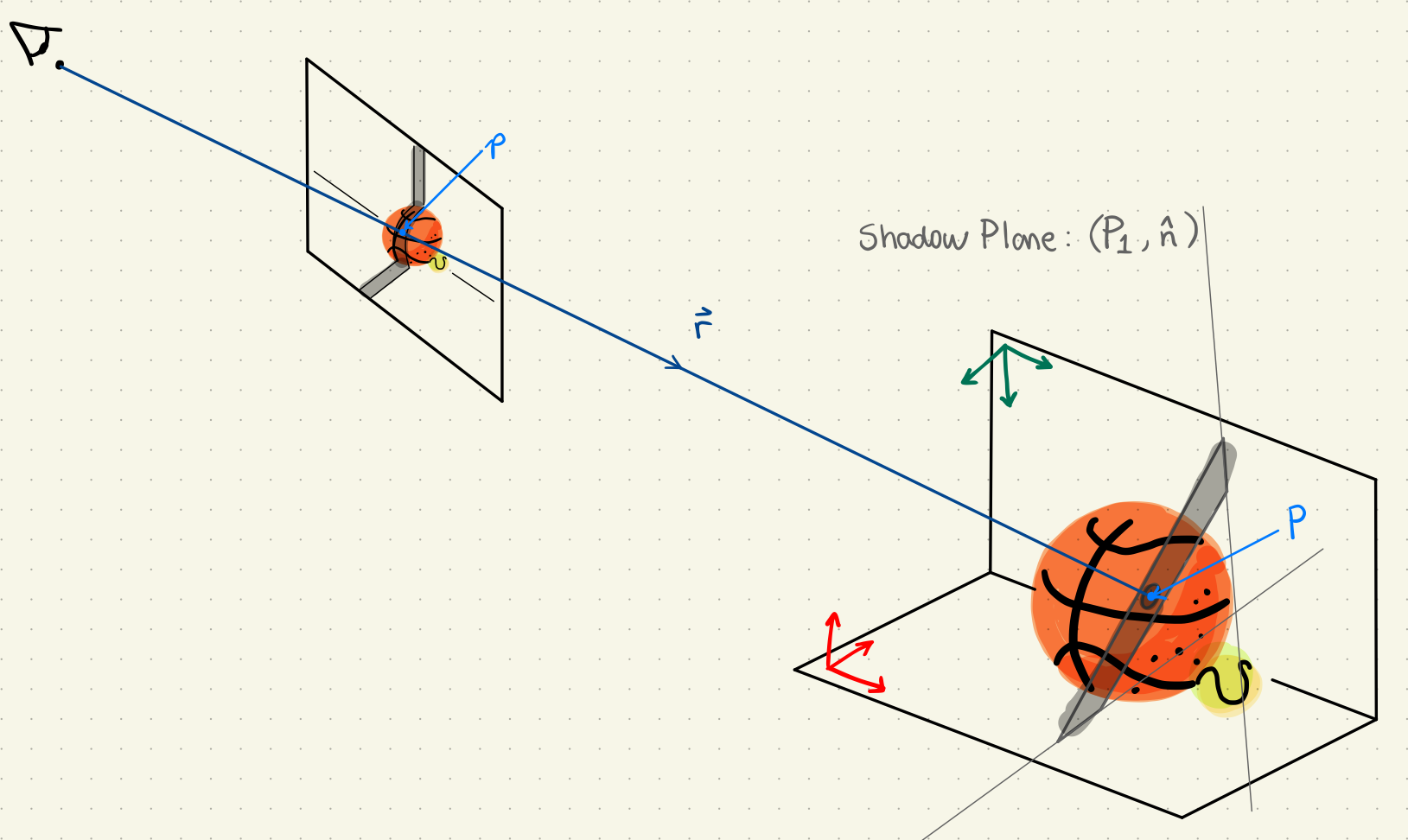

With all the data collected, we can now reconstruct the 3D surface. For each pixel \(p\):

- We know when the shadow crossed it from shadow detection, i.e. frame \(t\)

- We know the shadow plane equation for that given time \(t\), i.e. \(P_1\) and \(\hat{n}\)

- We can back-project the pixel into a ray \(\vec{r}\) with the camera’s intrinsic calibration

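To get the pixel’s 3D location \(P\), all that’s left is to find the intersection between the ray \(\vec{r}\) and the shadow plane at time \(t\). Since the ray starts at the camera center (the origin of the camera’s coordinate system), its points are \(P = \lambda \vec{r}\). Substituting into \((P-P_1)\cdot \hat{n} = 0\) gives

\[P = \frac{P_1 \cdot \hat{n}}{\vec{r} \cdot \hat{n}}\,\vec{r}\]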

That’s it! The calibration steps are somewhat involved, but with all the information gathered, the actual triangulation is a breeze!
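Put together, the triangulation is a short loop. Here is a minimal NumPy sketch. It assumes a time-of-crossing map and one shadow plane \((P_1, \hat{n})\) per frame, stored in a dictionary (a hypothetical layout). Each point is \(\lambda \vec{r}\), with \(\lambda\) chosen so that the point lies on the pixel’s shadow plane.

```python
import numpy as np

def triangulate(K, tmap, planes, eps=1e-9):
    """Intersect each pixel's camera ray with the shadow plane of its crossing frame.

    tmap:   (H, W) crossing-frame index per pixel, -1 where no crossing was found.
    planes: dict {frame t: (P1, n_hat)} in camera coordinates (hypothetical layout).
    Returns an (N, 3) array of camera-frame points.
    """
    K_inv = np.linalg.inv(K)
    points = []
    for (y, x), t in np.ndenumerate(tmap):
        if t < 0 or t not in planes:
            continue                              # no usable crossing for this pixel
        P1, n = planes[t]
        r = K_inv @ np.array([x, y, 1.0])         # ray direction through pixel (x, y)
        denom = np.dot(r, n)
        if abs(denom) < eps:                      # ray (nearly) parallel to the plane
            continue
        points.append(np.dot(P1, n) / denom * r)  # P = lambda * r
    return np.array(points).reshape(-1, 3)

# Usage: two pixels crossed at frame 0, whose shadow plane is z = 2.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
tmap = np.array([[0, -1], [0, 5]])
planes = {0: (np.array([0.0, 0.0, 2.0]), np.array([0.0, 0.0, 1.0]))}
cloud = triangulate(K, tmap, planes)              # pixel (1, 1) is skipped: no plane for frame 5
```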

Results

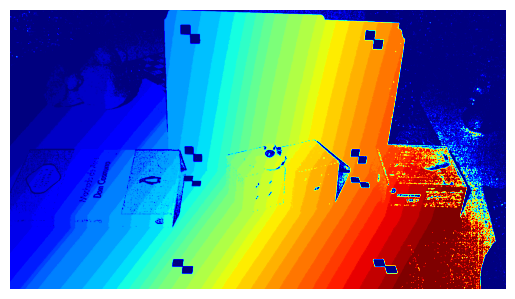

Here are some results of the scanning procedure on three different objects. On the left are the original videos used for scanning; on the right are the final results, detailed point clouds of the objects displayed at a slight angle to make them easier to see.

Challenges, Solutions and Limitations

I would say that the major limitation of this approach is the need for careful calibration of the scene, which can be tricky to do. For a geometric calibration-free approach, check out scanning with photometry.

One interesting challenge was dealing with dark surfaces. For example, when scanning Desmondinho (our stuffed toy subject), his black eyes and nose created holes in the reconstruction because they absorbed too much light for the shadow crossing to be detected. The same happened on the entire orange side of the Rubik’s cube, although this is not apparent in the scan.

These and other artifacts were remedied by heuristics applied during the process, such as the threshold used to detect shadow crossings and a check that decides which points in the cloud are valid before plotting them.

Of course, another limitation is the scene geometry, which only allows one view of the object to be scanned properly. This is why many professional scanning tools use rotating bases, so that the whole object can be captured.

Overall, these point clouds are far from perfect, but are still, in my opinion, very very cool. It is amazing to see what can be done just with a camera, a light source, some math and some code!

Build Your Own!

This process is super cool and you should do it if you can!

Want to build your own 3D scanner? You’ll need:

- A camera

- A checkerboard pattern for calibration

- A bright point light source (shadow lines must be sharp)

- A stick or ruler for casting shadows

The key to good results is careful calibration and controlled lighting conditions. Happy scanning! 📷