w2w++

Modelling a low-dimensional manifold of diffusion model weights with a VAE to create novel identities.

I worked on this project alongside Yining She and Yuchen Lin for our 10-423/623 (Generative AI) class at CMU. The following is a brief overview of the project. For more detail, please see our report / poster / code / group 😁.

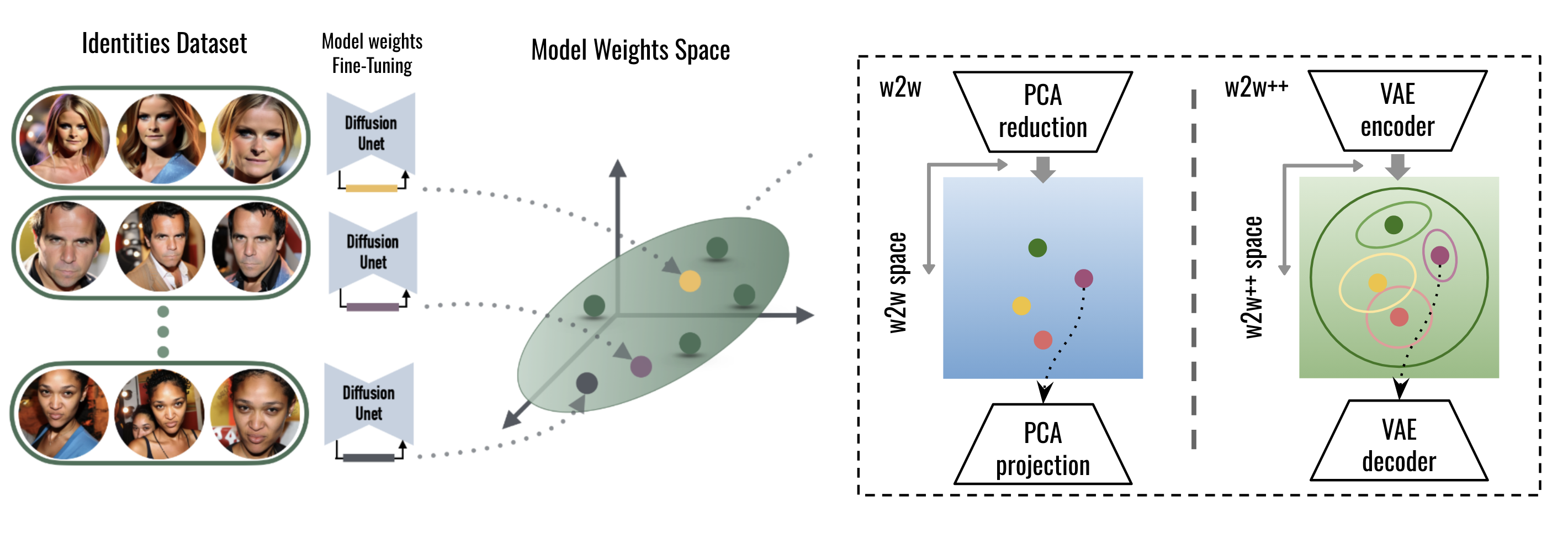

The original Weights2Weights work introduced a linear subspace of identity-encoding LoRA parameters, built from over 65k identities. In Weights2Weights++, we extended this work by replacing its linear PCA approach with a non-linear VAE architecture.

Modelling a nonlinear subspace with a VAE

While the authors show that PCA works well, it’s fundamentally limited by being a linear method. We hypothesized that a VAE could learn a more expressive nonlinear mapping between the ~100k-dimensional weight space and a much lower-dimensional latent space (512-dimensional in our implementation).
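For comparison, the linear baseline can be sketched in a few lines of NumPy. This is a minimal illustration, not the Weights2Weights code: it assumes the flattened LoRA weights are stacked as rows of a matrix, and the function names are hypothetical. Both the encoder and the decoder are affine maps, so every reconstructed model lies in a fixed k-dimensional affine subspace, which is exactly the restriction a non-linear VAE decoder relaxes.

```python
import numpy as np


def fit_pca(weights: np.ndarray, k: int):
    """Fit a k-dimensional linear subspace to flattened weight vectors.

    weights: (n_models, dim) array, one flattened LoRA per row.
    Returns the mean vector and a (k, dim) matrix of principal directions.
    """
    mean = weights.mean(axis=0)
    # Rows of vt are orthonormal principal directions, sorted by singular value.
    _, _, vt = np.linalg.svd(weights - mean, full_matrices=False)
    return mean, vt[:k]


def pca_encode(w, mean, basis):
    # Linear "encoder": coefficients are dot products with each basis vector.
    return (w - mean) @ basis.T


def pca_decode(coeffs, mean, basis):
    # Linear "decoder": the mean plus a linear combination of basis vectors.
    return mean + coeffs @ basis
```

At the real scale (tens of thousands of ~100k-dimensional vectors), a full SVD like this is expensive, and a randomized or incremental PCA would be the practical choice.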

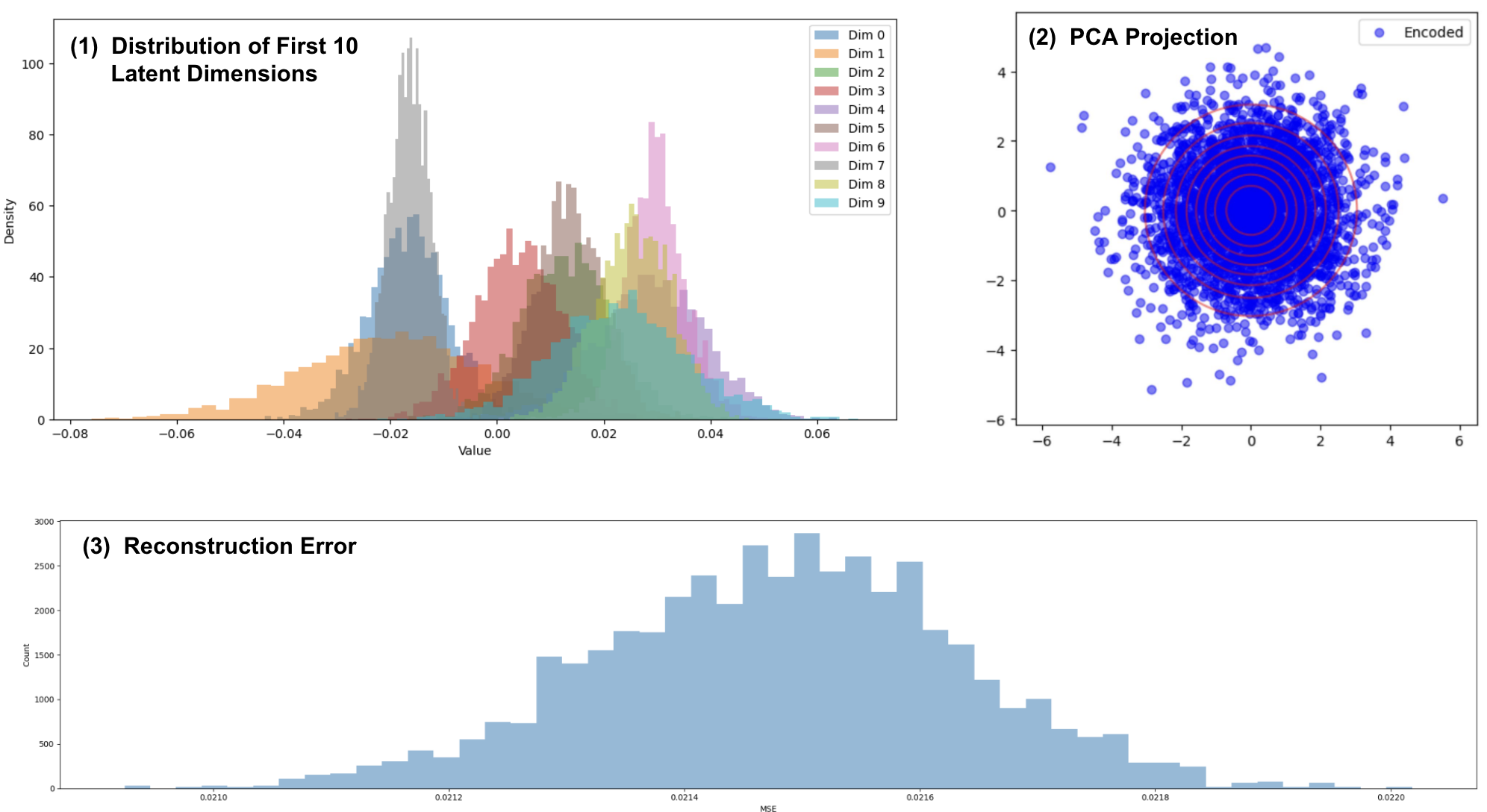

To test this hypothesis, we implemented a relatively simple VAE with \(\beta\) warmup and annealing, which lets the model learn expressive latent representations before the KL term pushes it toward a zero-mean Gaussian prior. We experimented with many architectures and training schedules before finding one that worked well.
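A minimal sketch of a warmup-then-anneal schedule for \(\beta\), in plain Python. The shape (zero during warmup, then a linear ramp up to a ceiling) and all default values are assumptions for illustration, not our tuned settings, and `beta_schedule` is a hypothetical name.

```python
def beta_schedule(step: int, warmup_steps: int = 1_000,
                  anneal_steps: int = 10_000, beta_max: float = 1.0) -> float:
    """KL weight at a given training step (hypothetical defaults).

    - During warmup, beta is 0, so only the reconstruction loss is optimized.
    - During annealing, beta rises linearly from 0 to beta_max.
    - Afterwards, beta stays at beta_max.
    """
    if step < warmup_steps:
        return 0.0
    progress = (step - warmup_steps) / anneal_steps
    return beta_max * min(progress, 1.0)
```

In a training loop, the value returned for the current step would multiply the KL term of the loss.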

The architecture itself is simple: the encoder progressively reduces dimensionality through linear layers with non-linear activations, while the decoder mirrors this structure in reverse. Because flattened LoRA parameters have no intrinsic spatial structure, we did not use a more specialized architecture, though we did experiment with convolutional layers, with little success.
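A forward-pass sketch of this mirrored design in NumPy. It is illustrative only: training needs an autodiff framework such as PyTorch, the leaky-ReLU activation and He-style initialization are assumptions, and `MLPVAE`, `init_mlp` and `mlp` are hypothetical names.

```python
import numpy as np


def init_mlp(dims, rng):
    """One (weight, bias) pair per consecutive pair of layer widths (He-style init)."""
    return [
        (rng.normal(0.0, np.sqrt(2.0 / d_in), size=(d_in, d_out)), np.zeros(d_out))
        for d_in, d_out in zip(dims[:-1], dims[1:])
    ]


def mlp(x, layers, final_activation=False):
    """Apply linear layers with leaky-ReLU activations between them."""
    for i, (w, b) in enumerate(layers):
        x = x @ w + b
        if i < len(layers) - 1 or final_activation:
            x = np.where(x > 0, x, 0.01 * x)
    return x


class MLPVAE:
    """Hypothetical fully connected VAE over flattened LoRA weight vectors."""

    def __init__(self, input_dim, hidden_dims, latent_dim, seed=0):
        self.rng = np.random.default_rng(seed)
        # Encoder narrows input_dim -> hidden_dims[0] -> ... -> hidden_dims[-1].
        self.encoder = init_mlp([input_dim, *hidden_dims], self.rng)
        # Separate linear heads for the posterior mean and log-variance.
        self.mu_head = init_mlp([hidden_dims[-1], latent_dim], self.rng)
        self.logvar_head = init_mlp([hidden_dims[-1], latent_dim], self.rng)
        # Decoder mirrors the encoder: latent_dim -> reversed hidden widths -> input_dim.
        self.decoder = init_mlp([latent_dim, *reversed(hidden_dims), input_dim], self.rng)

    def encode(self, x):
        h = mlp(x, self.encoder, final_activation=True)
        return mlp(h, self.mu_head), mlp(h, self.logvar_head)

    def reparameterize(self, mu, logvar):
        # z = mu + sigma * eps keeps sampling differentiable in an autodiff framework.
        eps = self.rng.standard_normal(mu.shape)
        return mu + np.exp(0.5 * logvar) * eps

    def decode(self, z):
        # No output activation: LoRA weights can take any real value.
        return mlp(z, self.decoder)

    def forward(self, x):
        mu, logvar = self.encode(x)
        return self.decode(self.reparameterize(mu, logvar)), mu, logvar
```

With the dimensions described in this post, `input_dim` would be roughly 100,000, the first hidden layer would have about 4,000 units, and `latent_dim` would be 512; any intermediate widths would be placeholders.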

Sampling and Interpolating

The VAE approach shines when it comes to sampling and interpolation. Unlike PCA, where sampling is done through modelling a distribution for each basis vector’s coefficient, with a VAE we can simply sample from a standard normal distribution and decode.
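The two sampling routes can be contrasted in NumPy. This sketch assumes an independent Gaussian per PCA coefficient (one simple choice; the Weights2Weights sampling procedure may differ in detail) and a hypothetical `decode` callable standing in for the trained VAE decoder.

```python
import numpy as np


def sample_pca(coeffs, mean, basis, n, rng):
    """PCA-style sampling: fit an independent Gaussian to each coefficient.

    coeffs: (n_models, k) projections of the training weights onto the basis.
    mean:   (dim,) mean weight vector; basis: (k, dim) principal directions.
    """
    mu = coeffs.mean(axis=0)
    sigma = coeffs.std(axis=0)
    new_coeffs = rng.normal(mu, sigma, size=(n, coeffs.shape[1]))
    return mean + new_coeffs @ basis


def sample_vae(decode, latent_dim, n, rng):
    """VAE sampling: draw from the N(0, I) prior and decode."""
    z = rng.standard_normal((n, latent_dim))
    return decode(z)
```

The PCA route needs per-coefficient statistics estimated from the training set, while the VAE route only needs the prior, because the KL term trains the encoder's posteriors toward it.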

Randomly sampled models are capable of generating consistent identities. Another trait of VAEs is their ability to smoothly interpolate in the latent space:

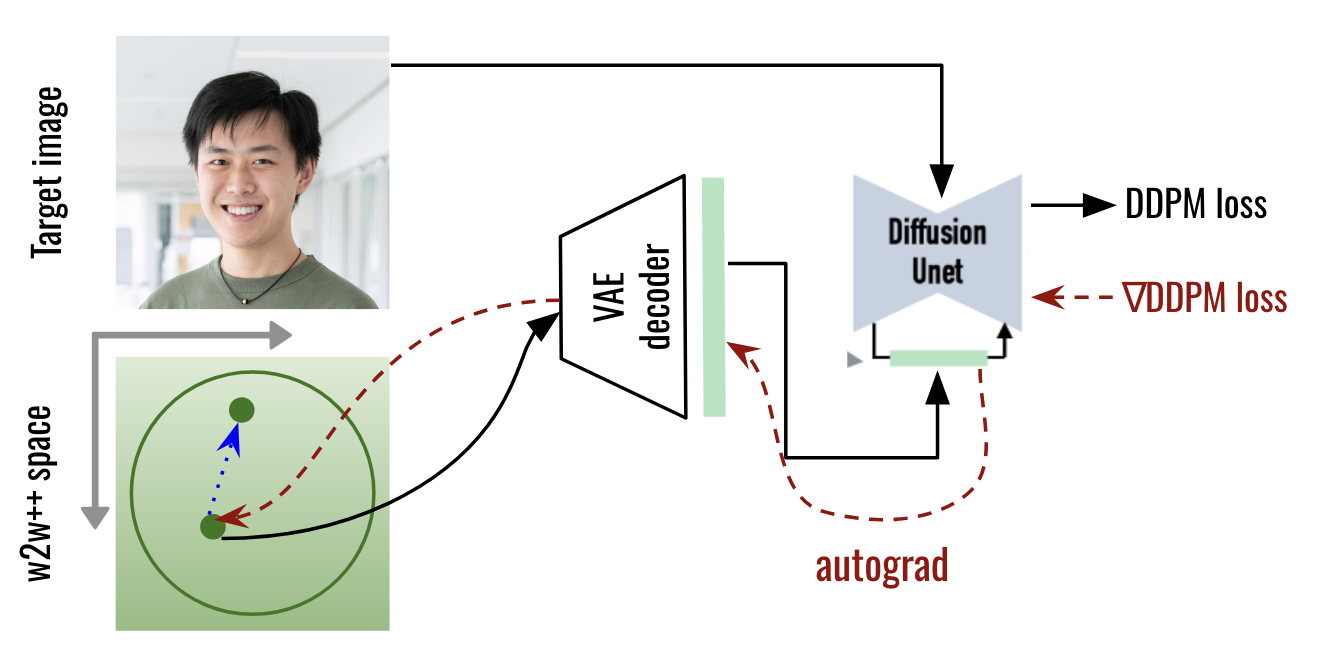

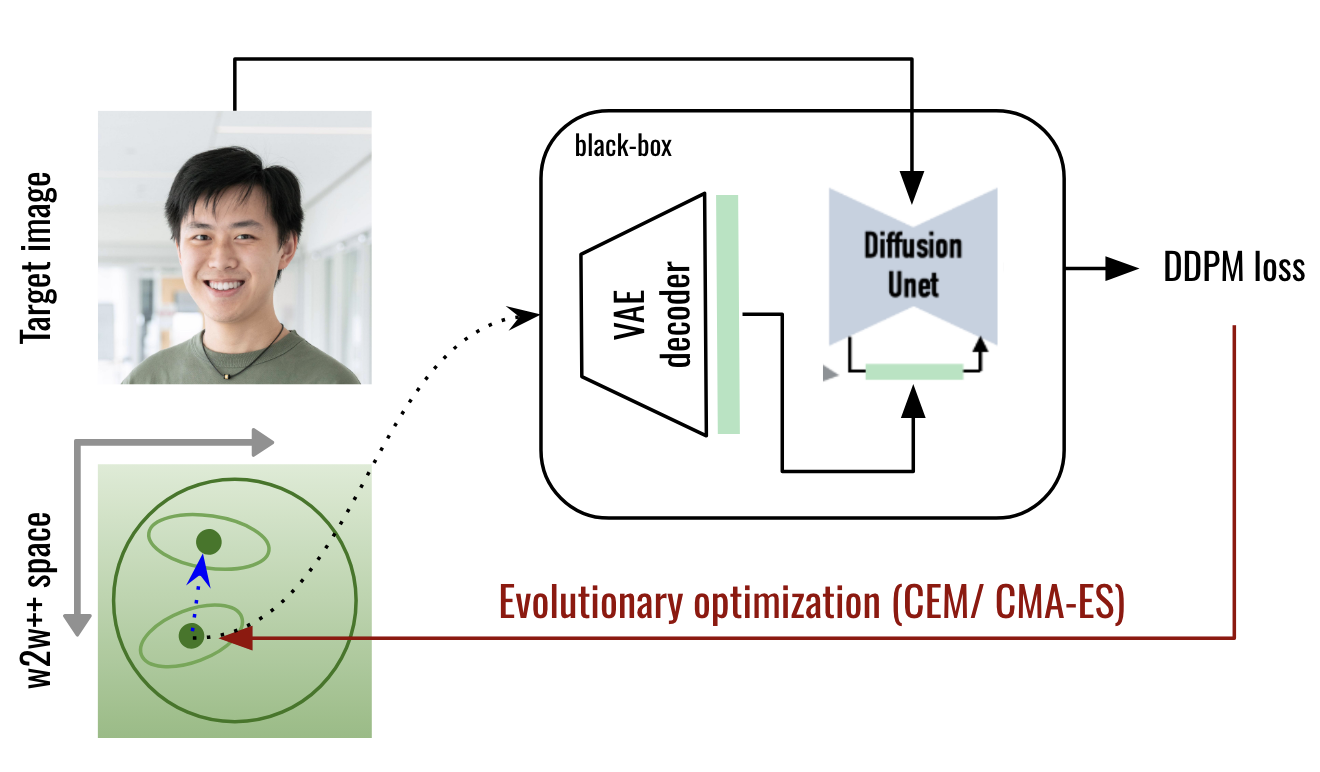
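Interpolation amounts to decoding points on a path between two latent codes. The sketch below offers plain linear interpolation and spherical linear interpolation (slerp), a common choice for Gaussian latents; the function names are hypothetical and the inclusion of slerp is our illustrative choice. Each row of the returned path would be passed through the decoder to obtain one set of LoRA weights.

```python
import numpy as np


def lerp(z0, z1, t):
    """Straight-line interpolation between two latent codes."""
    return (1 - t) * z0 + t * z1


def slerp(z0, z1, t, eps=1e-8):
    """Spherical linear interpolation along the arc between z0 and z1."""
    cos_omega = np.dot(z0, z1) / (np.linalg.norm(z0) * np.linalg.norm(z1))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    # Fall back to lerp when the vectors are (anti)parallel and sin(omega) ~ 0.
    if np.sin(omega) < eps:
        return lerp(z0, z1, t)
    return (np.sin((1 - t) * omega) * z0 + np.sin(t * omega) * z1) / np.sin(omega)


def interpolation_path(z0, z1, steps, method=slerp):
    """Stack `steps` evenly spaced interpolants from z0 (t=0) to z1 (t=1)."""
    return np.stack([method(z0, z1, t) for t in np.linspace(0.0, 1.0, steps)])
```

Slerp is often preferred because, midway between two near-orthogonal high-dimensional Gaussian samples, linear interpolation yields a point whose norm is smaller by a factor of about \(1/\sqrt{2}\) than typical prior samples, whereas slerp between equal-norm endpoints keeps the norm constant.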

Inversion

For the challenging task of projecting new images into our weight space, we explored both gradient-based and evolutionary approaches.

Unfortunately, neither approach succeeded. The most likely reason is that our latent space was not smooth enough to navigate by optimizing the DDPM loss used in the inversion task.
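A minimal sketch of the evolutionary route, using a simple elitist evolution strategy (not necessarily the exact algorithm we ran). In the real task, `loss_fn` would decode a latent `z` into LoRA weights, load them into the diffusion model, and return the DDPM denoising loss on the target image; here it is a hypothetical stand-in so the example runs on its own. `evolve_latent` and all hyperparameter defaults are placeholders.

```python
import numpy as np


def evolve_latent(loss_fn, latent_dim, generations=200, population=64,
                  n_elite=8, sigma=1.0, decay=0.98, seed=0):
    """Minimize loss_fn(z) with a simple elitist evolution strategy.

    Each generation samples candidates around the current mean, keeps the
    n_elite lowest-loss candidates, and moves the mean to their average.
    """
    rng = np.random.default_rng(seed)
    mean = np.zeros(latent_dim)  # start at the prior mean
    for _ in range(generations):
        candidates = mean + sigma * rng.standard_normal((population, latent_dim))
        losses = np.array([loss_fn(z) for z in candidates])
        elite = candidates[np.argsort(losses)[:n_elite]]
        mean = elite.mean(axis=0)
        sigma *= decay  # shrink the search radius over time
    return mean
```

The gradient-based route would instead backpropagate the same loss through the decoder to `z`. Both routes depend on the loss changing smoothly as `z` moves, which is the property our latent space appeared to lack.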

Challenges

The biggest challenge in this project was getting the VAE training dynamics right. We went through several iterations:

- Initially used standard VAE training, but the model collapsed to generating similar weights

- Tried different static \(\beta\) weights for the KL loss, but none were robust

- Experimented with architectural changes like residual connections

- Finally succeeded with KL annealing, letting reconstruction dominate early training
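To make the annealing fix concrete, here is the \(\beta\)-weighted objective it modulates, sketched in NumPy. It assumes mean-squared-error reconstruction on the flattened weights and a diagonal Gaussian encoder with a standard normal prior; `vae_loss` is a hypothetical name. While \(\beta\) is 0, only `recon_loss` drives training, which is what lets reconstruction dominate early on.

```python
import numpy as np


def vae_loss(x, recon, mu, logvar, beta):
    """Beta-weighted negative ELBO for a Gaussian encoder and N(0, I) prior.

    Reconstruction: mean squared error over all weight entries.
    KL: closed form of KL(N(mu, sigma^2) || N(0, I)), summed over latent
    dimensions and averaged over the batch.
    """
    recon_loss = np.mean((recon - x) ** 2)
    kl = (-0.5 * np.sum(1 + logvar - mu ** 2 - np.exp(logvar), axis=1)).mean()
    return recon_loss + beta * kl, recon_loss, kl
```

Because the reconstruction term here is a mean over ~100k entries while the KL is summed over latent dimensions, the useful range of \(\beta\) depends on how each term is scaled, which can make a single static value hard to choose.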

Limitations and Future Work

While we succeeded in creating a more expressive model of the weight space, several limitations remain:

- The inversion problem needs more work, possibly requiring changes to the latent space structure

- We’re still working with a relatively small dataset of weights (~65k models)

- Our aggressive reduction in the first layer (100k → 4k) may limit capacity. More GPU memory would enable testing larger architectures
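A quick back-of-the-envelope estimate shows why that first layer is the bottleneck. This assumes fp32 parameters and an Adam-style optimizer that stores two extra values per parameter (neither is stated above), ignores activation memory, and uses a hypothetical helper name.

```python
def linear_layer_memory_gb(in_dim, out_dim, bytes_per_param=4, optimizer_copies=2):
    """Parameter count and training memory (GB) for one dense layer.

    Memory covers weights + gradients + optimizer state
    (Adam keeps two moment buffers per parameter).
    """
    params = in_dim * out_dim + out_dim  # weight matrix + bias
    total_bytes = params * bytes_per_param * (2 + optimizer_copies)
    return params, total_bytes / 1e9


params, gb = linear_layer_memory_gb(100_000, 4_000)
print(params, round(gb, 2))  # 400004000 6.4
```

Under these assumptions the 100k → 4k layer alone needs about 6.4 GB, and the decoder's mirrored 4k → 100k output layer needs roughly the same again, so doubling the first hidden width would add more than 12 GB before counting activations.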

Future work could explore using more sophisticated architectures like transformers to capture weight structure, creating larger datasets, or developing new approaches to the inversion problem.