Fixing damaged phone cameras with deconvolution

Repairing a broken phone lens is expensive. Here's how I used some math and a little bit of Python to fix them for free!

This project was completed as a final project for my Computational Photography class at CMU, 15-463. You can find here the video presentation and github repo containing all the code and the report.

The Problem

The basis of the thin lens model is that a point light source maps to a single point on the sensor. However, real lenses don’t work like that. Instead, light in optical systems suffers from aberrations. These can be chromatic aberration, geometric distortion, coma, and more. Each of these effects make it so that a single point light source does not map to a single point in the sensor, effectively lowering the quality of the image in their own particular ways.

To conuter this, lens designers build complex systems with many expensive elements, which means that high quality lenses tend to be bulky and expensive. Mobile Phone lenses are no exception to this rule, and over the past decade they have grown increasingly more complex. A problem that is particular to phone cameras, though, is their harsh environment. These lenses can break at seemingly random times, turning a nicely engineered piece of glass into somehting that can only produce blurry, low quality images.

In fact, this happened to my own phone. The cost to fix my lens was too high, so instead I turned to a well-studied computational solution for this type of problem: Deconvolution.

Imaging Model

Aberrations cause a point light source to spread over a certain region of the sensor instead of at a single point. We call this the lens’ Point Spread Function (PSF). In practice, the PSF usually acts like a low-pass filter, and we can model it as a blur kernel \(k\) that is convolved with a ground truth image \(i\). Finally, we need to account for added noise due to the imaging pipeline, which we assume to be a zero-mean gaussian \(n\):

\[b = k * i + n\]where \(b\) is our blurry image that we get out of the camera. Alternatively, we can model this process in a matrix-vector form, by unrolling our kernel \(k\) into a circulant matrix \(K\) where each encodes the region of the image \(i\) being convolved with \(k\):

\[\mathbf{b} = \mathbf{K}\mathbf{i} + \mathbf{n}\]Lastly, we can write an equivalent representation of this model in the frequency domain thanks to the Convolution Theorem:

\[\mathcal{F}\{b\}= \mathcal{F}\{k\} \times \mathcal{F}\{i\} + n\]Where \(\mathcal{F}\{b\}, \mathcal{F}\{k\}, \mathcal{F}\{i\}\) are the Discrete Fourier Transforms of \(b, k\) and \(i\).

The task moving forward will be to find a best estimate \(\hat{i}\) given a blurry image \(b\) and known PSF \(k\).

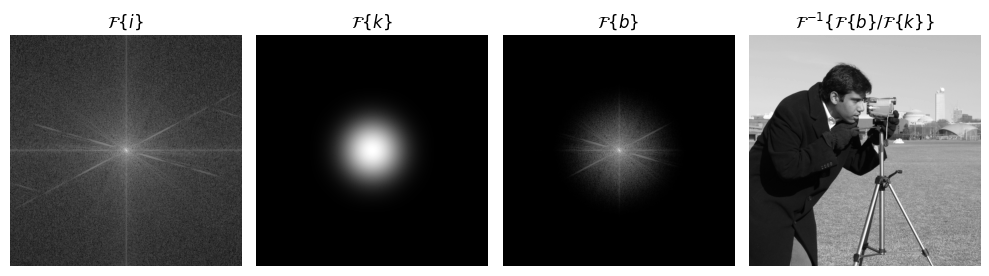

The Naïve Approach: Unfiltering

In a noise-free setting (where \(n\)=0), the simplest approach would be to try solving this in the frequency domain. Since convolution becomes multiplication in Fourier space, then deconvolution is just division of the blurry image by the PSF kernel:

\[\mathcal{F}\{b\}= \mathcal{F}\{k\} \times \mathcal{F}\{i\} \Rightarrow i = \mathcal{F}^{-1}\left\{\frac{\mathcal{F}\{b\}}{\mathcal{F}\{k\}}\right\}\]

Unfourtunately though, the noise-free assumption does not hold in the real world. Noise in the blurry image \(\mathcal{F}\{b\}\) can be divided by a potentially tiny value in \(\mathcal{F}\{k\}\), effectively amplifying the noise in the resulting recovered image \(i\):

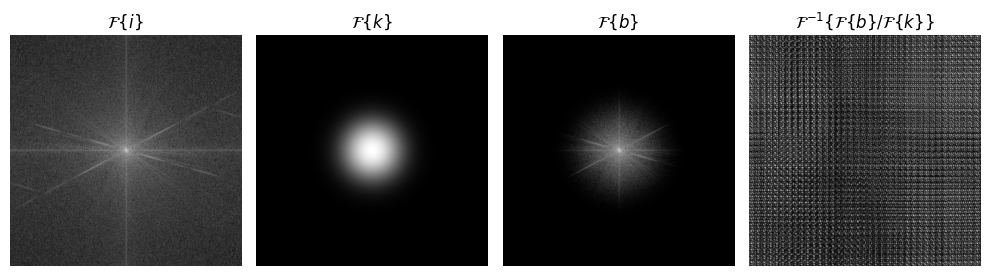

Wiener Deconvolution: A First Step

A more sophisticated approach is Wiener deconvolution, which accounts for noise by adding regularization in the frequency domain based on image statistics for the signal-to-noise (SNR) ratio for different frequencies:

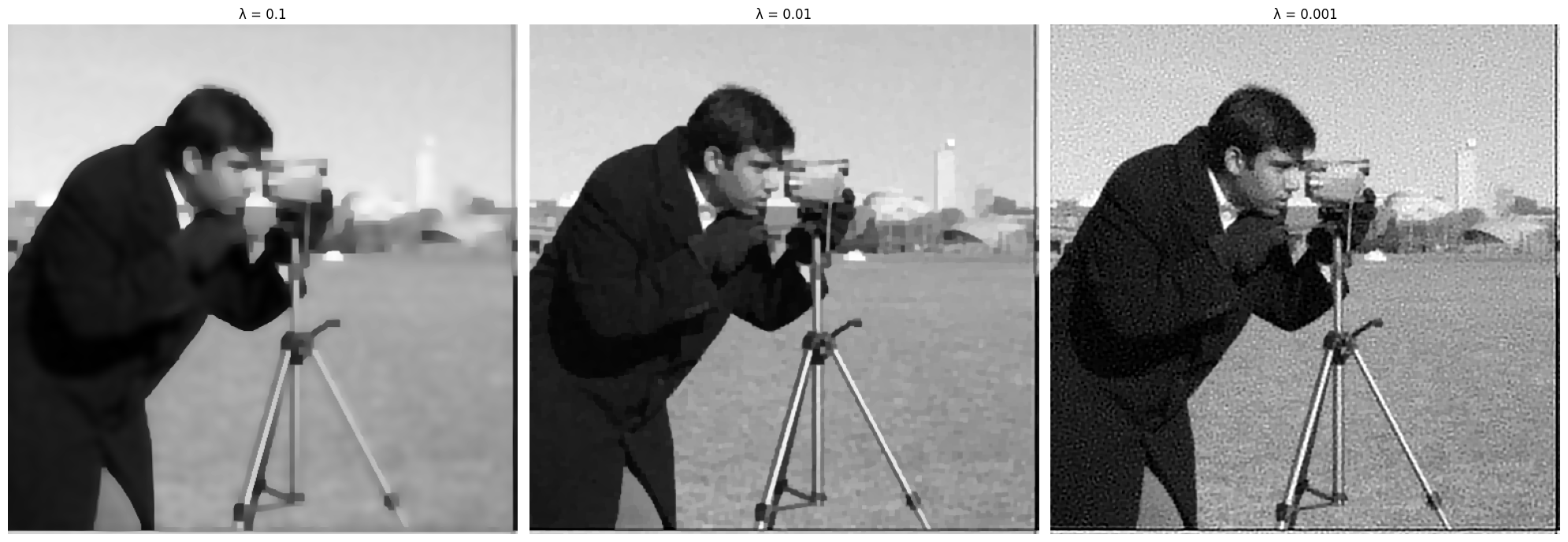

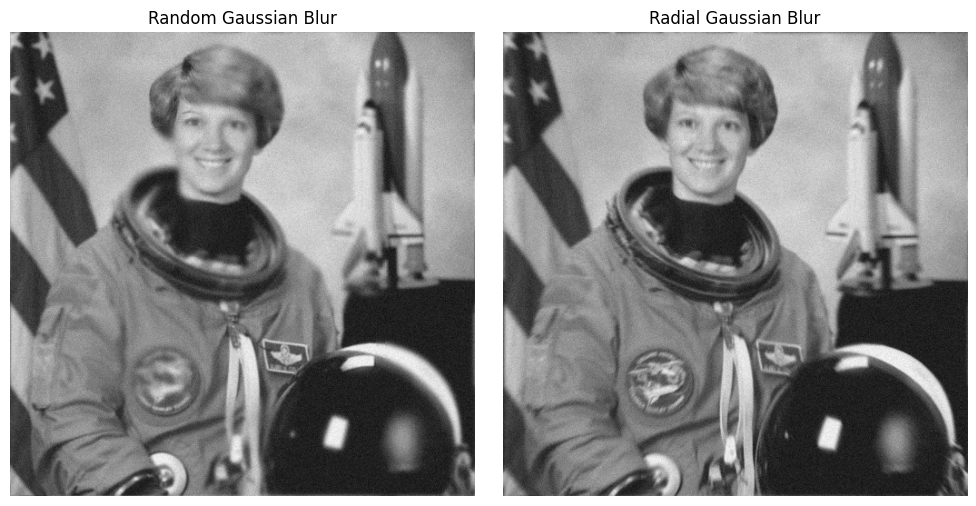

\[\hat{i} = \mathcal{F}^{-1}\left\{\frac{|\mathcal{F}\{k\}|^2}{|\mathcal{F}\{k\}|^2 + 1/SNR(\omega)}\cdot\frac{\mathcal{F}\{b\}}{\mathcal{F}\{k\}}\right\}\]Where \(SNR(\omega)\) is the signal-to-noise ratio for frequency \(\omega\), commonly approximated as \(\frac{1}{\omega^2}\). This simple change significantly improves results where noise is present:

However, there are still many artifacts around the image that don’t make a lot of sense, such as the texture in the sky, which should be smooth. As we vary how much the regularizing term is weighed, we trade off detail for smoothness. To improve upon this, we need to use a more natural form of regularization.

Total Variation Regularizer

Natural images tend to be mostly smooth with occasional sharp edges (object boundaries, horizon lines, etc.). We can use this knowledge to improve our deconvolution by adding what’s called a Total Variation (TV) regularizer. The math looks like this:

\[\hat{i} = \mathop{\mathrm{argmin}}\limits_{x}\|Kx-b\|_2^2 + \lambda\|\nabla x\|_1\]This equation has two parts:

- Data Fidelity: \(\|Kx-b\|_2^2\) ensures our solution matches the blurry input when re-blurred

- TV Regularizer: \(\lambda\|\nabla x\|_1\) encourages smooth regions while preserving sharp edges

The parameter \(\lambda\) controls how much smoothing we want - larger values produce smoother results, while smaller values give more weight to the reconstruction of \(b\). Considering that \(K\) is \(N \times N\), where \(N\) is the number of pixels in the image, this system can be intractable to solve conventionally. Thankfully, there are other ways to do this.

ADMM-TV

The Alternating Direction Method of Multipliers (ADMM) is a general algorithm for solving minimization problems in the form of

\[\begin{align*} \text{minimize}~~ &f(x) + g(z)\\ \text{subject to}~ &Ax + Bz = c \end{align*}\]The ADMM algorithm is used to solve problems in this form iteratively and efficiently by introducing a dual variable \(y\) that splits \(f(x)\) and \(g(z)\) into independent problems. This strucutre is usually exploited by formulating \(f\) and \(g\) such that their proximal operators are computationally cheap, allowing for low complexity operations within the iterative loop.

To solve the TV deconvolution problem, we build a system as

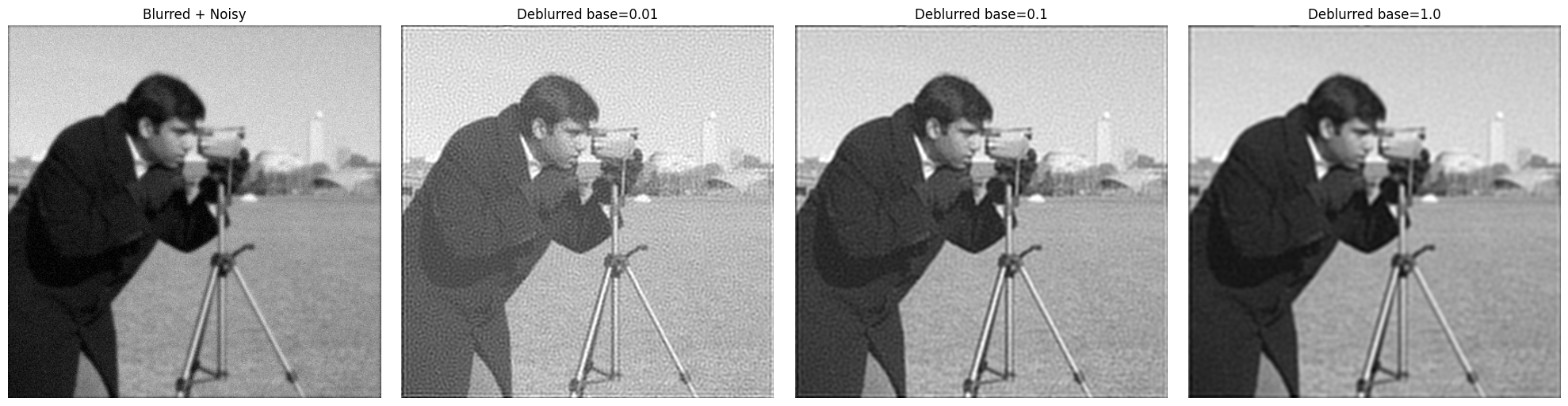

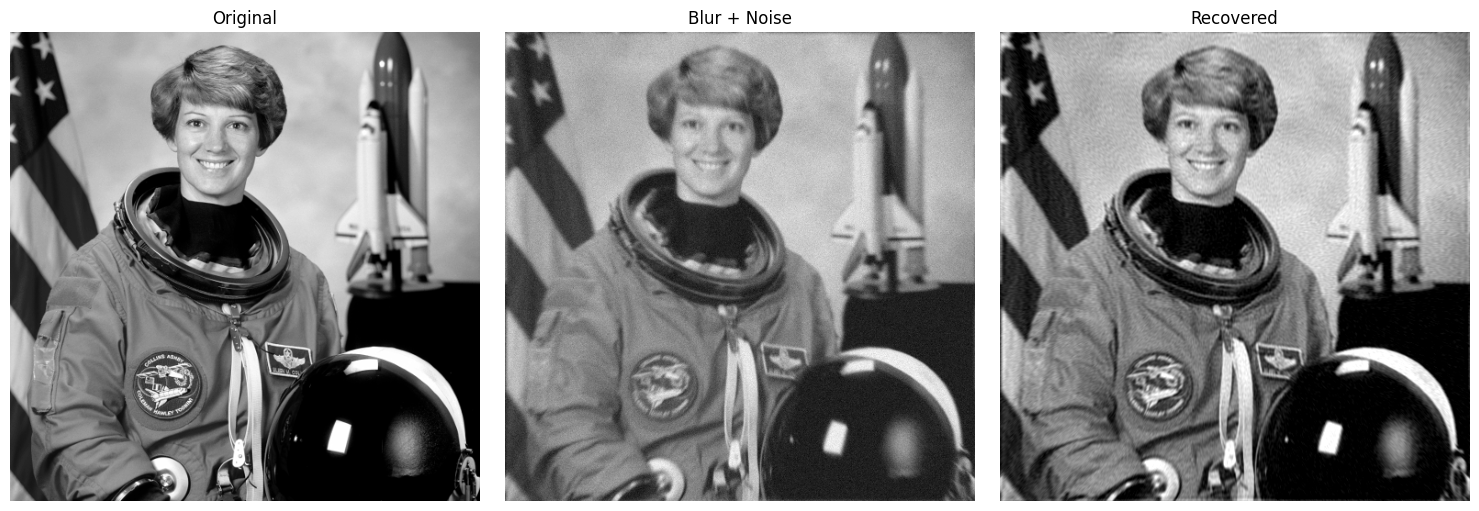

\[f(x) = \frac{1}{2}\|Kx - b\|_2^2,~ ~g(z) = \lambda \|z \|_1\\\] \[A = \nabla, B=-I, c=0\]Where \(\nabla\) is the matrix representation of the finite-differences operation. As such, minimizing this system in the ADMM sense will yield a deconvolved image with the TV regularizer taken into account, and we call this approach ADMM-TV. For more detail on the algorithm, please see my formal report. Here are some results from using ADMM-TV:

Compared to the Wiener Deconvolution approach, the TV regularizer works well to smooth out regions of the image we expect to be noise-free, such sas the sky, for example. While some detail is retrieved, finding an appropriate value for λ can be pretty tricky and vary depending on the scene:

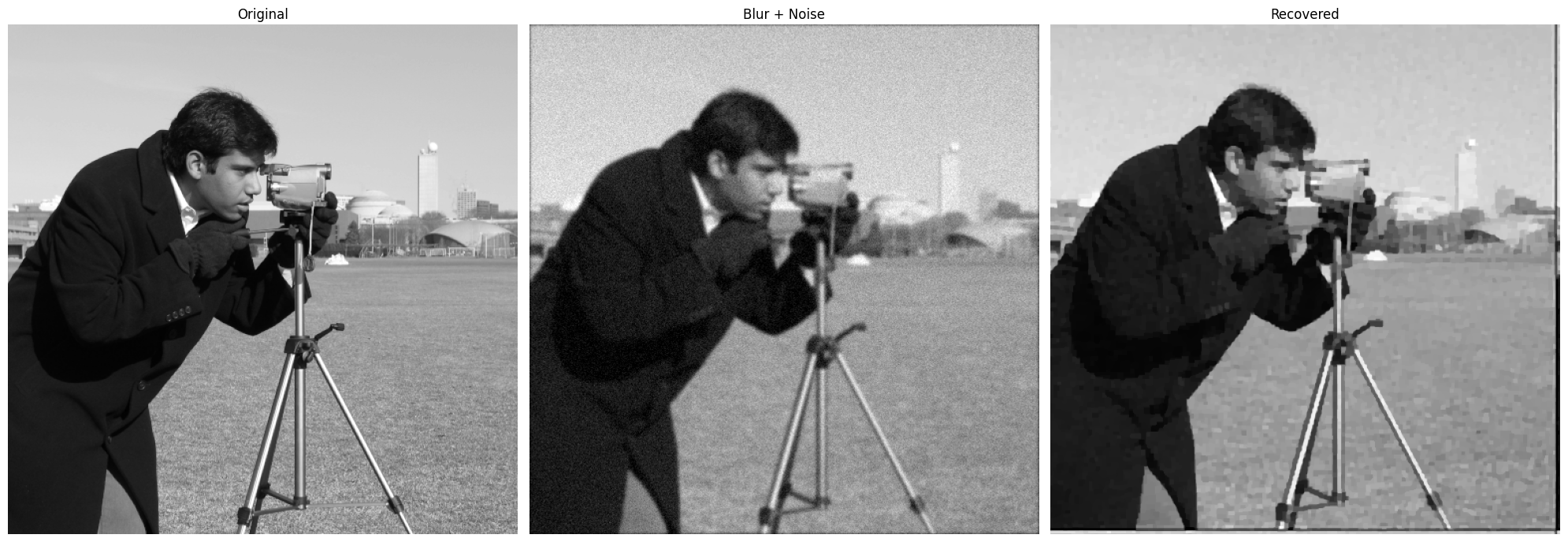

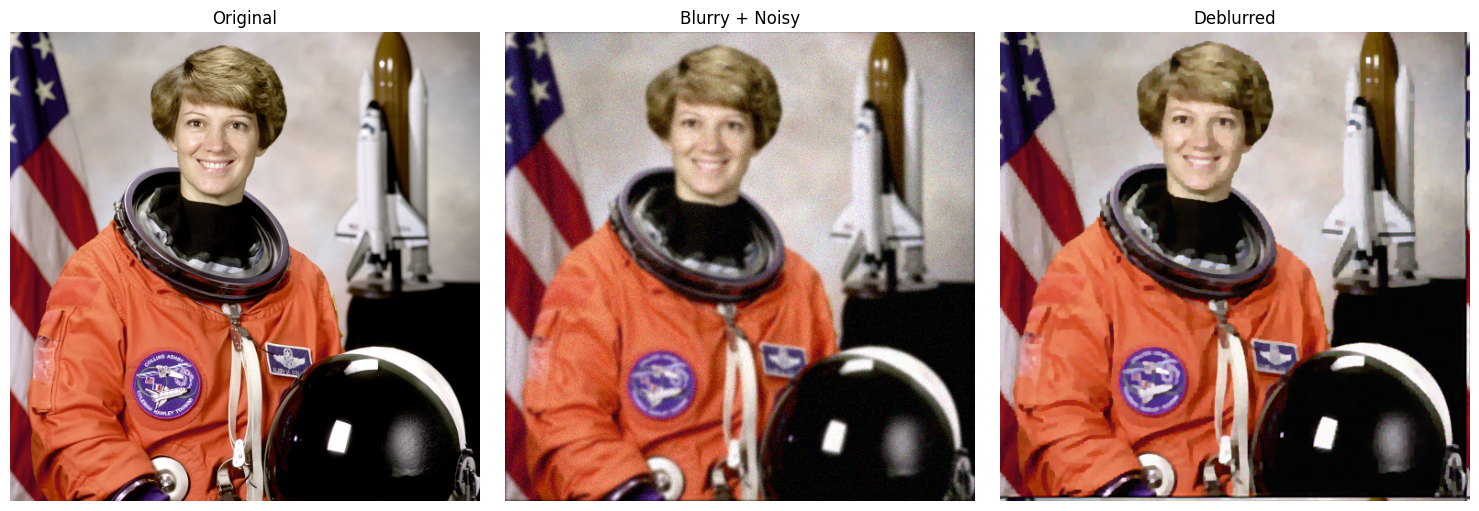

As expected, a higher value of λ causes the image to be smoother, but less detailed, while a smaller λ retrieves a lot of the detail but is sensitive to noise. We can also de-noise color images by performing deconvolution on each channel independently:

Nonlocal PSFs

One big limitation of the ADMM-TV algorithm presented above is its assumption that a lens’s PSF is constant over the entire image. This is what enables the algorithm to efficiently iterate, as the proximal operator for retrieving \(x\) relies on an unfiltering operation in the frequency domain.

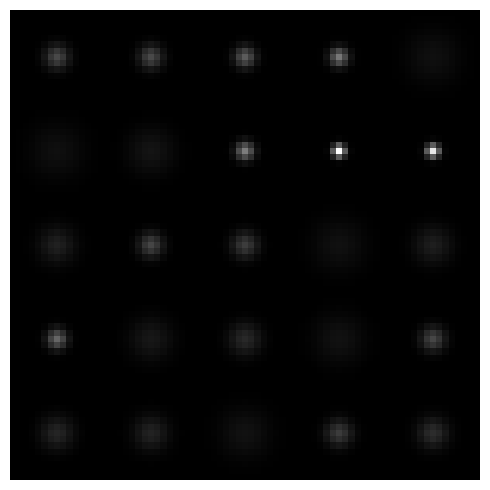

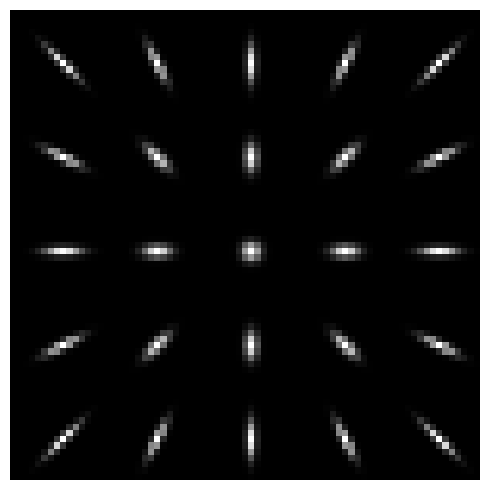

However, not all lenses are accurately modelled by a single PSF, some might be better modelled by many locally-invariant PSFs:

To formalize this, we use the Nagy-O’Leary model, where a spatially-varying PSF is represented as a sum of local kernels, weighed at each pixel in the image.

\[K = \sum_{p=1}^{P} U_p K_p\]where

- \(P\) is the number of patches in the image

- \(K_p\) is the local PSF for patch \(p\)

- \(U_p\) is a nonnegative diagonal matrix weighing how each pixel tends to kernel \(K_p\), and \(\sum_{p=1}^P U_p = I\)

Following this formulation, we can blur images according to a spatially-varying PSF:

DR-TV

The Douglas-Rachford Splitting algorithm (DR) is another powerful optimization method that solves problems in the form of

\[\begin{align*} \text{minimize}~~ &f(x) + g(z)\\ \text{subject to}~ &Ax = z \end{align*}\]Like ADMM, DR introduces a dual variable \(y\) to split the optimization into simpler subproblems. However, DR has a slightly different structure that can be advantageous for certain applications, particularly when dealing with multiple splitting terms.

For spatially-varying PSF deconvolution with the TV regularizer, we follow the Nagy-O’Leary formulation where the PSF kernel \(K\) is represented as a sum of local kernels: \(K = \sum_{p=1}^P U_pK_p\). Here, each \(K_p\) represents a constant PSF for image patch \(p\), and \(U_p\) are diagonal matrices that weight the influence of each kernel.

This leads to a DR formulation where:

\[f(x) = 0\] \[g(z_1,\ldots,z_P, w) = \frac{1}{2}\|U_1z_1 + \cdots + U_Pz_P - b\|_2^2 + \lambda\|w\|_1\\\] \[A = [K_1, \ldots, K_P, \nabla]\]The key insight is that this formulation allows the fidelity term to be split into smaller parts while maintaining the TV regularization through the gradient term \(\nabla\). When solved, this system minimizes the original spatially-varying deconvolution problem:

\[0 + g(Ax) = \frac{1}{2}\|U_1K_1x + \ldots + U_PK_Px-b\|_2^2 = \frac{1}{2}\|Kx-b\|_2^2 + \lambda\|\nabla x\|\]With DR-TV, we get better than linear speedups compared to a naïve implementation with multiple kernels. For a grid of 25 total kernels, the processing time on an M1 CPU was approximately 8 times that of a single-kernel ADMM-TV run with same hyperparameters.

Real-World phone camera results

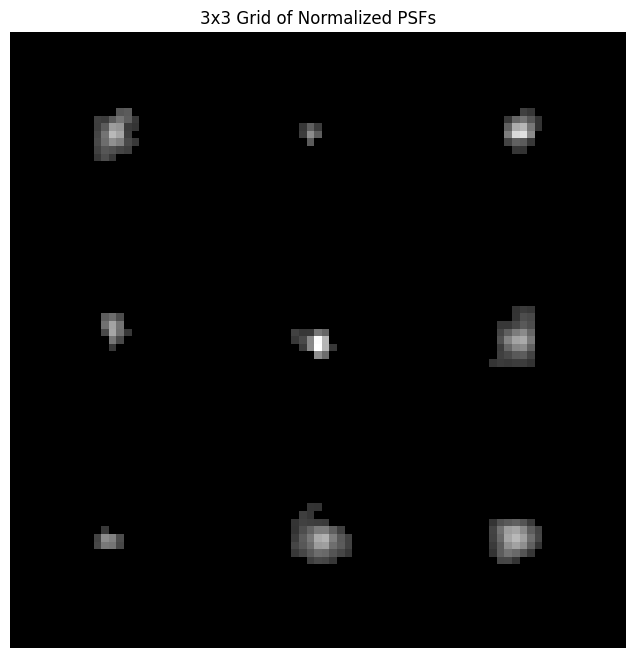

For this, I used a point light source and linear stage to measure my Phone’s PSF at 9 square patches of the camera’s field of view. I collected the following spatially-varying PSF:

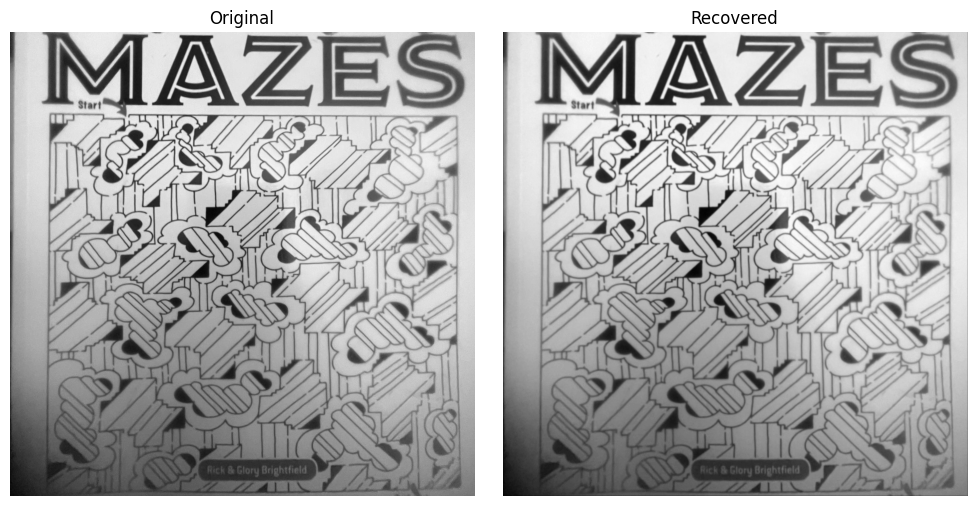

Using this PSF with the DR-TV algorithm and a blurry image captured with my phone’s camera:

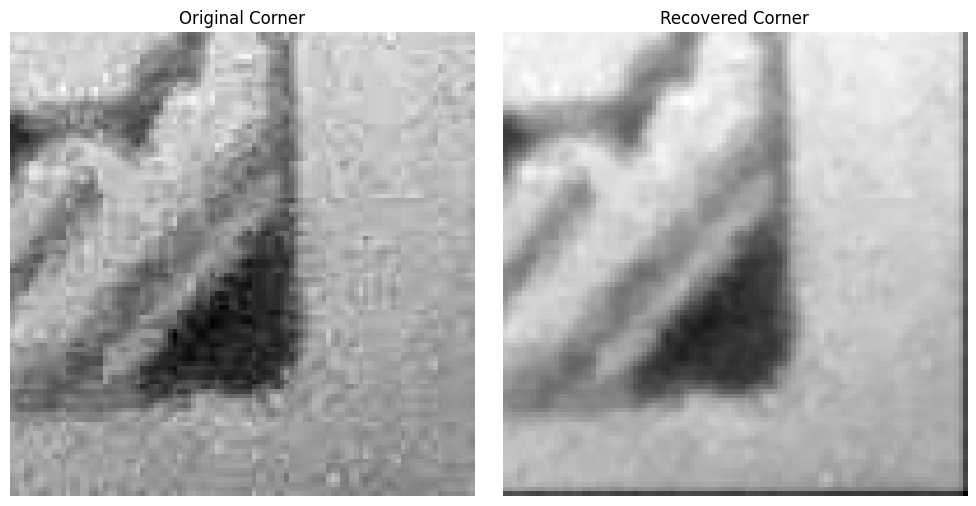

At a first glance, the effects of deconvolution aren’t very noticeable. This is likely due to an innacurate PSF measurement or suboptimal hyperparameters. Taking a closer look at the corners, where the blur is strongest, however, indicates an improved image with less noise:

Limitations and Future Work

While the results are promising, several challenges remain:

-

Color and Chromatic Aberration: The current implementation processes each color channel independently, which doesn’t account for wavelength-dependent blur (chromatic aberration) that’s especially common in simple or damaged lenses.

-

PSF Calibration: Manual PSF measurement is time-consuming and requires specialized equipment. An automated method to estimate spatially-varying PSF directly from blurry images would make the technique more practical.

-

Computational Cost: The iterative nature of the algorithms, especially DR-TV, makes real-time processing challenging. Exploring parallel implementations or more efficient optimization techniques could enable real-time applications.

-

Blind Deconvolution: The current approach assumes we know the PSF. For commercial applications, blind deconvolution approaches that can estimate the PSF from the blurry image itself might be more appropriate.

Looking Forward

Recent advances in deep learning offer exciting possibilities for improving this work:

- Neural networks could help estimate spatially-varying PSFs directly from blurry images

- Learning-based regularizers could replace TV for better detail preservation

- Network architectures could be designed to handle chromatic aberration naturally

The code for this project is available on GitHub, including implementations of Wiener, ADMM-TV, and DR-TV deconvolution algorithms.